Feb 03, 2014

Robots with insect brains (w/video)

(Nanowerk News) Autonomous robots that find their way through unfamiliar terrain? That future may not be so distant. Researchers at the Bernstein Focus Neuronal Basis of Learning, the Bernstein Center Berlin and the Freie Universität Berlin have developed a robot that perceives environmental stimuli and learns to react to them ("Conditioned behavior in a robot controlled by a spiking neural network").

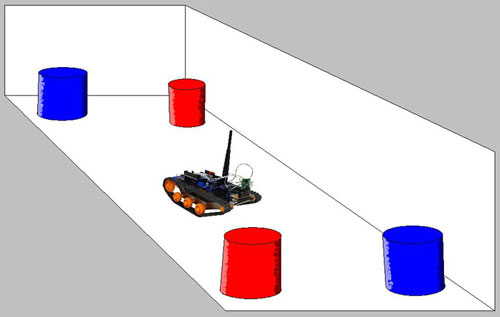

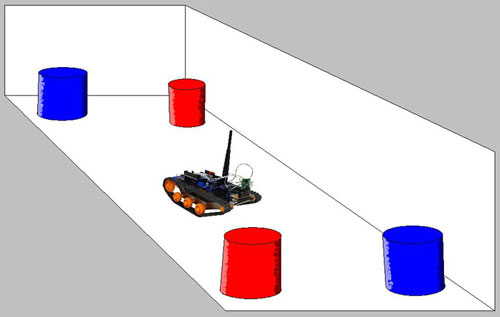

The robot in the arena. The small camera films the objects and sends the images to the neural network via Wi-Fi. The network processes the data and controls the direction in which the robot moves.

The scientists used the relatively simple nervous system of the honeybee as a model for the robot's working principles. To this end, they mounted a camera on a small robotic vehicle and connected it to a computer. The computer program implemented a simplified version of the sensorimotor network of the insect brain. Its input data came from the camera, which, much like an eye, received visual information and passed it on. The neural network, in turn, drove the motors of the robot's wheels and could thus steer the robot.
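
The sensorimotor loop described above (camera input, network, wheel motors) can be sketched as follows. This is a minimal, rate-based illustration, not the authors' spiking implementation: the two color channels, the names `sensory_input`, `motor_output` and `WheelCommand`, and the linear weighting are hypothetical choices made for clarity.

```python
from dataclasses import dataclass


@dataclass
class WheelCommand:
    left: float   # left wheel speed (arbitrary units)
    right: float  # right wheel speed (arbitrary units)


def sensory_input(red_fraction: float, blue_fraction: float) -> list:
    """Hypothetical 'eye': fraction of red and blue pixels in view, clipped to [0, 1]."""
    def clip(x: float) -> float:
        return min(max(x, 0.0), 1.0)
    return [clip(red_fraction), clip(blue_fraction)]


def motor_output(inputs: list, weights: list, base_speed: float = 1.0) -> WheelCommand:
    """Hypothetical motor stage: weighted sensory activity drives both wheels."""
    drive = sum(w * x for w, x in zip(weights, inputs))
    # Clip the drive to [-1, 1]: positive moves the robot forward, negative backward.
    speed = base_speed * max(-1.0, min(1.0, drive))
    return WheelCommand(left=speed, right=speed)


# Example: mostly red in view, red weighted positively and blue negatively.
cmd = motor_output(sensory_input(0.8, 0.0), weights=[1.0, -1.0])
print(cmd)  # WheelCommand(left=0.8, right=0.8)
```

Driving both wheels at the same speed keeps the sketch to forward and backward motion only; a real controller would also steer by giving the two wheels different speeds.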

The outstanding feature of this artificial mini brain is its ability to learn by simple principles. “The network-controlled robot is able to link certain external stimuli with behavioral rules,” says Professor Martin Paul Nawrot, head of the research team and member of the sub-project “Insect inspired robots: towards an understanding of memory in decision making” of the Bernstein Focus. “Much like honeybees learn to associate certain flower colors with tasty nectar, the robot learns to approach certain colored objects and to avoid others.”

In the learning experiment, the scientists placed the network-controlled robot in the center of a small arena. Red and blue objects were mounted on the walls. As soon as the robot's camera focused on an object of the desired color (red, for instance), the scientists triggered a light flash. This signal activated a so-called reward sensor nerve cell in the artificial network. The simultaneous processing of the red color and the reward led to specific changes in those parts of the network that control the robot's wheels. As a consequence, when the robot “saw” another red object, it started to move toward it. Blue objects, in contrast, made it move backward. “Within seconds, the robot accomplishes the task of finding an object in the desired color and approaching it,” explains Nawrot. “Only a single learning trial is needed, similar to experimental observations in honeybees.”
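
The one-shot conditioning in this experiment can be illustrated with a reward-gated Hebbian rule: a connection from a color-sensitive input to the motor stage is strengthened only when that input is active at the same moment as the reward signal. The sketch below is a hypothetical, non-spiking simplification. The class name `RewardGatedLearner`, the learning rate and the threshold are assumptions, as is the rule that any stimulus without enough learned drive triggers a retreat.

```python
COLORS = ("red", "blue")


def encode(color: str) -> list:
    """One-hot activity of hypothetical color-sensitive input neurons."""
    return [1.0 if c == color else 0.0 for c in COLORS]


class RewardGatedLearner:
    """Hypothetical sketch of reward-gated associative learning (not the published model)."""

    def __init__(self, learning_rate: float = 1.0, threshold: float = 0.5):
        self.weights = [0.0] * len(COLORS)  # naive robot: no learned associations
        self.learning_rate = learning_rate
        self.threshold = threshold

    def learn(self, color: str, reward: float) -> None:
        # Hebbian update gated by reward: only inputs active during the reward change.
        x = encode(color)
        self.weights = [w + self.learning_rate * reward * xi
                        for w, xi in zip(self.weights, x)]

    def act(self, color: str) -> str:
        drive = sum(w * xi for w, xi in zip(self.weights, encode(color)))
        # Assumption: enough learned drive means approach; otherwise retreat.
        return "forward" if drive > self.threshold else "backward"


learner = RewardGatedLearner()
learner.learn("red", reward=1.0)  # a single pairing of red with the light-flash reward
print(learner.act("red"), learner.act("blue"))  # forward backward
```

With a learning rate of 1.0, one pairing is enough to push the red input above threshold, mirroring the single learning trial described above; with a learning rate of 0.3, the same rule would need two pairings.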

A robotic platform designed for implementing and testing spiking neural network control architectures. This video demonstrates a neuromorphic real-time approach to sensory processing, reward-based associative plasticity and behavioral control, inspired by the biological mechanisms underlying rapid associative learning and the formation of distributed memories in insects.
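
In a spiking neural network, neurons communicate through discrete spikes rather than continuous values. The source does not specify the neuron model used here; the sketch below assumes a standard leaky integrate-and-fire (LIF) neuron, simulated with a simple Euler step, to show how a stronger sensory input turns into a higher spike rate. The function name `lif_spike_count` and all parameter values are illustrative assumptions.

```python
def lif_spike_count(input_current: float, duration_ms: float = 100.0, dt_ms: float = 0.1,
                    tau_ms: float = 10.0, v_rest: float = 0.0,
                    v_threshold: float = 1.0, v_reset: float = 0.0) -> int:
    """Count spikes of one leaky integrate-and-fire neuron driven by a constant input."""
    v = v_rest
    spikes = 0
    for _ in range(int(round(duration_ms / dt_ms))):
        # Euler step of tau * dV/dt = -(V - V_rest) + I (input in voltage units)
        v += dt_ms / tau_ms * (-(v - v_rest) + input_current)
        if v >= v_threshold:
            spikes += 1
            v = v_reset  # reset the membrane potential after each spike
    return spikes


for current in (0.5, 1.5, 3.0):
    print(current, lif_spike_count(current))
```

Inputs whose steady-state voltage (V_rest + I) stays below threshold, such as 0.5 here, never fire; above that, the spike count grows with the input, which is one way a camera-derived signal could be rate-coded.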

The current study was carried out as an interdisciplinary collaboration between Professor Martin Paul Nawrot's research group “Neuroinformatics” (Institute of Biology) and the group “Intelligent Systems and Robotics” (Institute of Computer Science), headed by Raúl Rojas, at Freie Universität Berlin. The scientists now plan to expand their neural network by adding further learning principles. This should make the mini brain even more powerful and the robot more autonomous.

The Bernstein Focus Neuronal Basis of Learning, sub-project “Insect inspired robots: towards an understanding of memory in decision making” and the Bernstein Center Berlin are part of the National Bernstein Network Computational Neuroscience in Germany. With this funding initiative, the German Federal Ministry of Education and Research (BMBF) has supported the new discipline of Computational Neuroscience since 2004 with more than 170 million euros. The network is named after the German physiologist Julius Bernstein (1835–1917).
