Nov 24, 2015

Robots: the curiosity of the body


(Nanowerk News) Robots could learn a lot from babies, for example from how infants acquire new movements. Children explore the world through play. In the process, they discover not only their environment but also their own bodies.


As Ralf Der of the Max Planck Institute for Mathematics in the Sciences and Georg Martius of the Institute for Science and Technology in Klosterneuburg, Austria, have now shown in robot simulations, a robot brain built from artificial neurons does not need a higher-level control center to generate curiosity (PNAS, "Novel plasticity rule can explain the development of sensorimotor intelligence").


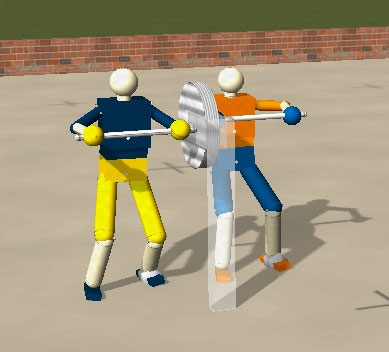

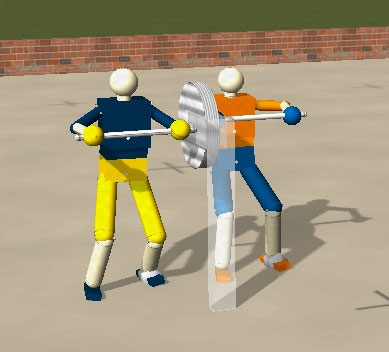

Coordinated without external coordination: two virtual robots learn to work together to turn a wheel without an external command to coordinate their movements to this end. The two figures are prompted to work together, e.g. to grip a wheel, solely by feedback between motion commands and sensory stimuli that arises from the interaction of the robots’ bodies with the environment. (Image: Ralf Der and Georg Martius)


Curiosity arises solely from feedback loops between motion commands on the one hand and, on the other, sensors that report how the robot’s body interacts with the environment. The robot’s control unit generates commands for new movements based purely on sensory signals. From initially small, even passive, movements, the robot develops a motor repertoire without specific higher-level instructions. Until now, robots capable of learning have either been given specific goals and rewarded for achieving them, or researchers have tried to program curiosity into them explicitly.


“What fires together, wires together”, a rule formulated by Canadian psychologist Donald Hebb, is well known to neuroscientists. This law states that the more often two neurons are active simultaneously, the more likely they are to link together and form complex networks. Hebb’s law can explain the formation of memory but not the development of movements. To learn to crawl, grasp or walk, people and adaptive robots need a playful curiosity that prompts them to try out new movements. Ralf Der of the Max Planck Institute for Mathematics in the Sciences and Georg Martius, who until recently worked at the same Max Planck Institute and is now continuing his research at the Institute for Science and Technology in Klosterneuburg, Austria, have now demonstrated what many researchers have long suspected: no higher-level command center is required for this.
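
Hebb’s rule as described above can be illustrated with a few lines of Python. This is only a minimal textbook-style sketch, not code from the study: the function name `hebbian_update`, the learning rate and the toy activity values are assumptions chosen for illustration.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    """Return new weights after one Hebbian step.

    weights[i][j] connects input neuron j to output neuron i and grows by
    rate * post[i] * pre[j], i.e. only when both neurons are active together.
    """
    return [
        [w + rate * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


# Two input neurons, one output neuron; all connections start at zero.
weights = [[0.0, 0.0]]
for _ in range(5):
    # Input 0 fires together with the output; input 1 stays silent.
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=[1.0])

print(weights)  # the first connection has grown, the second is still 0.0
```

The connection between the two co-active neurons is strengthened on every step, while the connection from the silent neuron is left unchanged. Nothing in this rule by itself prompts the system to try out anything new, which is the gap the article goes on to describe.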


“We’ve found that robots, at least, can develop motor skills without the need for a specific program for curiosity. This implies augmenting the information in their artificial neural network,” says Georg Martius. Together with Ralf Der, he has formulated a new sensorimotor learning rule that describes how links form in artificial neural networks, and possibly also in the brains of babies. These links allow robots and young children to learn new movements suited to the situation at hand.


Neuronal networks form when the body and environment interact


The learning rule is based on a model that mimics the dynamic interaction between three components: the body, the brain (in robots, an artificial neural network) and the environment. Initially there are no structures in the robot’s brain that control movements. The relevant neural networks form only when the body interacts with the environment and the limbs are bent slightly because they encounter an obstacle. This lies at the root of sensorimotor learning: the robot learns how to move.


For the learning process to begin at all, this model requires an initial impetus from the outside, Martius explains: “First, nothing happens at all. If the system is at rest, the neurons do not receive any signals.” The researchers therefore triggered a passive sensory stimulus in their robot, for example by leading it around on a virtual thread or simply by letting it sink to the floor so that its trunk, arms or legs were bent.


Much as stroke patients initially regain control of their arms or legs through passive movements, passive sensory stimuli give rise to an initial learning signal in the robotic brain. Though this signal is very weak, the sensorimotor control center uses it to generate a small, slightly modified motion, which produces a new sensory stimulus, which in turn is converted into a movement. In this way, stimuli and motor commands feed back on each other and settle into a coordinated pattern of movements.
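
The closed loop described above, in which nothing happens at rest while a small passive deflection is enough to start a self-sustaining movement, can be illustrated with a toy example. The sketch below is not the researchers' model: it contains no learning, and the fixed wiring in `controller`, the idealised `body` function and the value of `GAIN` are all assumptions made for illustration.

```python
import math

GAIN = 1.5  # assumed feedback strength; values above 1 let small signals grow


def controller(sensors):
    """Map two sensor values to two motor commands (fixed, hypothetical wiring)."""
    s0, s1 = sensors
    return (math.tanh(-GAIN * s1), math.tanh(GAIN * s0))


def body(motors):
    """Idealised body: the sensors simply report where the motors moved the joints."""
    return motors


def run(sensors, steps):
    """Iterate the sensor -> motor -> sensor loop and return the sensor history."""
    history = [sensors]
    for _ in range(steps):
        sensors = body(controller(sensors))
        history.append(sensors)
    return history


at_rest = run((0.0, 0.0), steps=20)   # no stimulus: the system stays silent
nudged = run((0.01, 0.0), steps=40)   # a tiny passive deflection starts the loop

print(at_rest[-1])
print(nudged[-4:])  # the two sensor values now take turns swinging back and forth
```

Started from rest, every sensor value stays at zero. Started from a deflection of 0.01, the same loop amplifies the signal until the saturating `tanh` limits it, and the two joints keep swinging in a fixed rhythm.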


Feedback between sensor signals and movement produces curiosity


The robot then practices the movement pattern until it is disturbed. For example, the robot crawls up to an obstacle, after which it develops new movement patterns. One of them will eventually allow it to overcome or bypass the obstacle. “In this way our robots exhibit curiosity. Ultimately, they learn more and more movements,” explains Georg Martius. “However, their curiosity arises solely from feedback between sensory stimuli and movement instructions as their body interacts with the environment.”


In computer simulations, the researchers applied their rule to the simple neural networks of virtual six-legged or humanoid robots, which learned to move around in this way. The robots even acquired movements that enabled them to cooperate with other robots. For example, after a while two humanoid robots joined forces to turn a wheel in a coordinated manner.


Martius emphasizes that their system quickly adapts to new situations that are determined by the environment. This is important because “it would be futile to try all possible movements and combinations. They are infinite in number, and that approach would take far too long.” The model therefore does not use random decisions. On the contrary, a specific sensory stimulus is translated into only a single motor command, so the same stimulus always gives rise to the same movement. Hence, the robot’s movements are derived directly from its past actions. “However, small changes in the sensory signals can have a large impact on the development of a movement pattern,” says Georg Martius.


Initial tests with a real robot were promising


In the long term, the researchers want to combine multiple movement patterns from a large repertoire to allow complex actions. First, however, Ralf Der and Georg Martius will test their learning rule on real robots. Initial experiments with an artificial arm have been promising, as the artificial limb developed the skills of a real arm.


The experiments confirm that robots, and possibly also the human brain, need no high-level curiosity center and no specific commands to develop new movements that they can ultimately put to practical use. Instead, the required neural networks appear to form solely because neurons that respond to external stimuli in the same way form tighter associations. Ralf Der and Georg Martius have therefore formulated a new rule based on Hebb’s law: “Chaining together what changes together”.
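
One simple way to read this slogan is as a "differential" variant of Hebb’s rule, in which a connection is strengthened when the activities of two neurons change in the same direction rather than when they are merely active at the same time. The sketch below illustrates only that reading. It is not the rule published in the PNAS paper, and the function name, learning rate and activity values are assumptions for illustration.

```python
def differential_hebbian_update(weight, pre_prev, pre_now, post_prev, post_now, rate=0.1):
    """Change a weight in proportion to the product of the two activity changes."""
    return weight + rate * (post_now - post_prev) * (pre_now - pre_prev)


# Both neurons are active but constant: nothing changes, so nothing is learned.
w_constant = differential_hebbian_update(0.0, 1.0, 1.0, 1.0, 1.0)

# Both activities rise together: the connection is strengthened.
w_together = differential_hebbian_update(0.0, 0.2, 0.6, 0.1, 0.5)

# One activity rises while the other falls: the connection is weakened.
w_opposed = differential_hebbian_update(0.0, 0.2, 0.6, 0.5, 0.1)

print(w_constant, w_together, w_opposed)
```

Under plain Hebbian learning, the first case would strengthen the connection because both neurons are active. Here only joint changes count, which ties learning to movement and to the sensory changes that movement produces.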
