Oct 18, 2018

A new interface to simultaneously control various robots

(Nanowerk News) Researchers from Universidad Politécnica de Madrid have developed a new virtual reality interface that allows a single operator to supervise multi-robot missions.

Researchers from the Robotics & Cybernetics Research Group (RobCib) at the Centre for Automation and Robotics (CAR), a joint center of Universidad Politécnica de Madrid (UPM) and the Spanish National Research Council (CSIC), have developed a new tool to optimize the workload of robot operators.

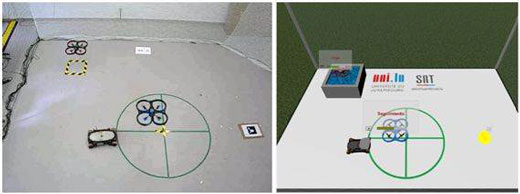

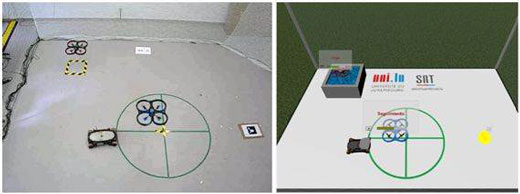

Using virtual reality, this tool places the operator in the scenario where the robots are carrying out the mission. The operator can move around and look for the best vantage point from which to observe and accurately guide the robots as they perform their tasks. The results (Sensors, "Multi-Robot Interfaces and Operator Situational Awareness: Study of the Impact of Immersion and Prediction") show that this interface gives operators better situational awareness and helps reduce their workload.

A mission carried out in the lab (left) and the virtual reality interface (right). (Image: UPM)

Robot operators have to deal with a heavy workload: they must process the mission information, make decisions and generate commands for the robots. They must also maintain situational awareness, which means understanding the scenario from the robot data and knowing where the robots are and what they are doing at all times.

RobCib researcher Juan Jesús Roldán states, “Today, missions require more operators than robots: one operator controls the motion and another assesses the robot data. The goal is for a single operator to control several robots simultaneously.”

A team of researchers from CAR (UPM-CSIC) has developed a virtual reality interface that places the operator in the scenario where the robots are carrying out the mission. In addition, the interface uses machine learning techniques to assess the relevance and risk of each robot during the mission, much as a human operator would. This information is shown to the operator intuitively: for instance, a light points to a drone while that drone is performing the most critical task.

The researchers carried out a set of tests to evaluate the new interface and compare it with a conventional one. A group of operators used both interfaces to supervise a set of firefighting and rescue missions performed by detection drones.

The results showed that the virtual reality interface gives operators better situational awareness (they had a better perception of the robots' routes and tasks) and a lower workload (less effort, better performance and less frustration).

The next steps of this research will cover a greater variety of scenarios (outdoor and indoor) and robots (ground and aerial), and will test new methods that allow operators to command the robots easily and intuitively. This video shows the interface: first, the events of the missions are reproduced in virtual reality, and then an operator handles the mission.

This work was carried out within Airbus's SAVIER project (Situational Awareness VIrtual EnviRonment) as a collaboration between Universidad Politécnica de Madrid, where researchers developed the interfaces, and the University of Luxembourg, where researchers conducted the tests using drones. The results were published in the journal Sensors.
