Sep 14, 2023

How do robots collaborate to achieve consensus?


(Nanowerk News) Making group decisions is no easy task, especially when the decision makers are a swarm of robots. To increase swarm autonomy in collective perception, a research team at the IRIDIA artificial intelligence research laboratory at the Université Libre de Bruxelles has proposed a new self-organizing approach in which one robot at a time temporarily acts as the “brain”, consolidating information on behalf of the group.

Their paper was published in Intelligent Computing ("Reducing Uncertainty in Collective Perception Using Self-Organizing Hierarchy"). In it, the authors show that their method improves the accuracy of collective perception by reducing sources of uncertainty.


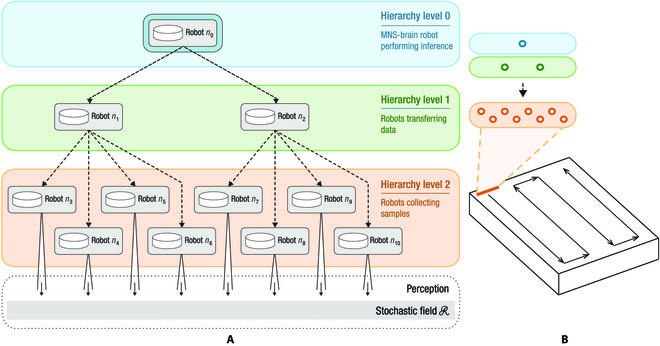

(a) The roles of robots in the collective perception task according to their hierarchy level. (b) Reactive sweeping motions. (© Intelligent Computing)

By combining aspects of centralized and decentralized control, the authors capture the benefits of both in one system: the scalability and fault tolerance of decentralized approaches together with the accuracy of centralized ones. The approach lets robots determine their relative positions within the system and fuse their sensor information at a single point, without requiring a global or static communication network or any external references. It also makes it possible, for the first time, to apply centralized methods for fusing information from multiple sensors to a self-organized system; such multi-sensor fusion techniques had previously been demonstrated only in fully centralized systems.
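The article does not say which fusion rule the “brain” applies. As a rough illustration of what centralized multi-sensor fusion at a single point can look like, the sketch below uses inverse-variance weighting, a textbook rule for combining independent estimates of the same quantity. It is a stand-in chosen for clarity, not the paper's method; the function name `fuse_estimates` and the example readings are hypothetical.

```python
from typing import Iterable, Tuple


def fuse_estimates(estimates: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (mean, variance) estimates by inverse-variance weighting.

    Generic centralized fusion rule used for illustration only; the paper's
    own fusion method may differ.
    """
    weight_sum = 0.0
    weighted_mean_sum = 0.0
    for mean, variance in estimates:
        if variance <= 0:
            raise ValueError("each variance must be positive")
        weight = 1.0 / variance          # more certain estimates count more
        weight_sum += weight
        weighted_mean_sum += weight * mean
    if weight_sum == 0.0:
        raise ValueError("at least one estimate is required")
    fused_mean = weighted_mean_sum / weight_sum
    fused_variance = 1.0 / weight_sum    # never larger than the best input variance
    return fused_mean, fused_variance


# Hypothetical per-robot density estimates: (objects per square metre, variance)
readings = [(0.40, 0.04), (0.50, 0.01), (0.45, 0.02)]
print(fuse_estimates(readings))
```

Because the fused variance is the reciprocal of the summed weights, pooling estimates at one point can only shrink the uncertainty relative to the best single robot, which is the intuition behind fusing at a central node.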

The authors tested the self-organizing hierarchy approach against three benchmark approaches and found that it performed best in terms of accuracy, consistency and reaction time under the tested conditions. In the experimental setup, a swarm of simulated drones and ground robots collects two-dimensional spatial data by detecting objects scattered in an arena and forms a collective opinion of object density. The robots must rely on their short-range sensors to estimate the number of objects per unit area.
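The article does not detail how short-range detections become a density estimate. A minimal sketch, assuming each sample records how many objects a robot saw and the area its sensor footprint covered, and assuming the footprints do not overlap (otherwise objects would be double-counted), is to pool all samples and divide total objects by total area. The `Sample` class and `estimate_density` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    """One hypothetical short-range observation made by a robot."""
    objects_seen: int  # objects detected within sensor range
    area_m2: float     # area covered by the sensor footprint, in square metres


def estimate_density(samples: List[Sample]) -> float:
    """Pooled object density: total objects seen / total area observed."""
    total_area = sum(s.area_m2 for s in samples)
    if total_area <= 0:
        raise ValueError("samples must cover a positive area")
    total_objects = sum(s.objects_seen for s in samples)
    return total_objects / total_area


samples = [Sample(2, 4.0), Sample(0, 4.0), Sample(3, 4.0), Sample(1, 4.0)]
print(estimate_density(samples))  # 6 objects over 16 m^2 → 0.375
```

Pooling counts before dividing weights each sample by the area it covers; averaging per-sample densities instead would give a small footprint as much influence as a large one.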

According to the authors, the new approach uses a “dynamic ad-hoc hierarchical network.” It builds on a general framework known as a mergeable nervous system, in which robots at each level of the hierarchy play different roles in decision-making and can change their connections and relative positions as needed, even though each robot can communicate only with its direct neighbors.
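One simple way to picture how hierarchy levels can arise when robots talk only to direct neighbors is a breadth-first search outward from the current brain over the neighbor graph: each robot's level is its hop count from the brain, and its parent is the neighbor through which it was first reached. This is a centralized simulation written for illustration, with hypothetical robot IDs and a hypothetical `build_hierarchy` function; it is not the mergeable-nervous-system protocol itself, which runs distributed on the robots.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple


def build_hierarchy(
    neighbors: Dict[str, List[str]], brain: str
) -> Tuple[Dict[str, int], Dict[str, Optional[str]]]:
    """Assign each reachable robot a level (hops from the brain) and a parent.

    Only direct-neighbor links are followed, mirroring the constraint that
    each robot communicates only with its direct neighbors.
    """
    level: Dict[str, int] = {brain: 0}
    parent: Dict[str, Optional[str]] = {brain: None}
    queue = deque([brain])
    while queue:
        robot = queue.popleft()
        for other in neighbors.get(robot, []):
            if other not in level:  # first time reached: shortest hop count
                level[other] = level[robot] + 1
                parent[other] = robot
                queue.append(other)
    return level, parent


# Hypothetical neighbor lists (who is within communication range of whom)
neighbors = {
    "A": ["B", "C"],
    "B": ["A", "D", "E"],
    "C": ["A", "F"],
    "D": ["B"],
    "E": ["B"],
    "F": ["C"],
}
level, parent = build_hierarchy(neighbors, brain="A")
print(level)   # {'A': 0, 'B': 1, 'C': 1, 'D': 2, 'E': 2, 'F': 2}
print(parent)  # {'A': None, 'B': 'A', 'C': 'A', 'D': 'B', 'E': 'B', 'F': 'C'}
```

Because the network is dynamic, rerunning the function on updated neighbor lists is the crudest way to model robots changing their connections; a real swarm would more likely repair the tree locally.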

In the authors’ approach, the “brain” robot at the top level performs inference and sends motion instructions downstream; robots at the middle level manage data transfer and help balance global and local motion goals (for instance, during obstacle avoidance); and the majority of robots, at the bottom level, collect samples while managing their own local motion.
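The division of labor described above can be sketched on a parent map: the root is the brain, robots with children act as middle-level relays, and leaves are bottom-level samplers. Sample data then flows upward hop by hop (a convergecast) so that the brain ends up with the swarm-wide total. The role labels "relay" and "sampler" and the functions `assign_roles`, `depth` and `convergecast` are hypothetical; this is a simplified model, not the authors' implementation.

```python
from typing import Dict, Optional


def assign_roles(parent: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Label each robot by its position in the tree (hypothetical role names)."""
    has_children = {up for up in parent.values() if up is not None}
    roles: Dict[str, str] = {}
    for robot, up in parent.items():
        if up is None:
            roles[robot] = "brain"    # top level: inference, motion instructions
        elif robot in has_children:
            roles[robot] = "relay"    # middle level: forwards data and instructions
        else:
            roles[robot] = "sampler"  # bottom level: collects samples
    return roles


def depth(robot: str, parent: Dict[str, Optional[str]]) -> int:
    """Number of hops from `robot` up to the brain."""
    current: Optional[str] = parent[robot]
    hops = 0
    while current is not None:
        hops += 1
        current = parent[current]
    return hops


def convergecast(parent: Dict[str, Optional[str]], local_counts: Dict[str, int]) -> int:
    """Pass per-robot object counts upward, hop by hop; return the brain's total."""
    subtotal = {robot: local_counts.get(robot, 0) for robot in parent}
    # Deepest robots report first, so every robot has heard from all of its
    # children before it forwards its own subtotal to its parent.
    for robot in sorted(parent, key=lambda r: depth(r, parent), reverse=True):
        up = parent[robot]
        if up is not None:
            subtotal[up] += subtotal[robot]
    brain = next(r for r, up in parent.items() if up is None)
    return subtotal[brain]


parent = {"A": None, "B": "A", "C": "A", "D": "B", "E": "B", "F": "C"}
print(assign_roles(parent))
# {'A': 'brain', 'B': 'relay', 'C': 'relay', 'D': 'sampler', 'E': 'sampler', 'F': 'sampler'}
print(convergecast(parent, {"D": 2, "E": 0, "F": 3}))  # 5
```

Motion instructions would travel the same tree in the opposite direction, from the brain down through the relays to the samplers.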

Future research on the topic might investigate advanced inference methods and further examine the robustness of sampling methods under other types of robot failures or challenging environmental conditions, such as environments with large obstacles or irregular boundaries.

Source: Intelligent Computing (Note: Content may be edited for style and length)
