Artificial Intelligence: A Comprehensive Guide

Artificial intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. These machines can be trained to perform tasks that typically require human intelligence, such as recognizing speech, understanding natural language, making decisions, and playing games. AI can be categorized into several different types, including rule-based systems, expert systems, genetic algorithms, neural networks, and machine learning.

Few terms are as poorly understood as artificial intelligence, which has given rise to so many questions and debates that no single definition of the field is universally accepted. Arriving at such a definition is further complicated, if not made impossible, by the fact that there isn’t even a unanimously agreed definition of (human) intelligence or what constitutes ‘thinking’.

The rise of ChatGPT, Bard and friends

Recent advancements in large language models (LLMs) and creative AI have demonstrated the vast potential of these technologies across a wide range of applications and domains. LLMs, such as OpenAI's GPT series and Google's Bard, are capable of understanding and generating human-like text based on context and prior knowledge. They have been used (and misused) for applications like content generation, translation, and summarization, and have demonstrated a remarkable ability to grasp complex ideas, engage in conversation, and even answer questions.

Creative AI, on the other hand, focuses on the development of algorithms that can generate innovative and aesthetically pleasing output, such as art, music, and poetry. This branch of AI has made significant strides, resulting in AI-generated artwork that has been auctioned at prestigious auction houses, AI-composed music in various genres, and even AI-generated screenplays and novels.

Both LLMs and creative AI have evolved rapidly in recent years, primarily due to advancements in deep learning, an AI subfield that focuses on neural networks with many layers. These neural networks have become increasingly proficient at processing and understanding vast amounts of data, allowing AI systems to learn patterns and generate output that is more accurate and human-like.

However, the rise of LLMs and creative AI has also raised ethical concerns, such as the potential for AI-generated misinformation, biases in the algorithms, and the impact on human employment in creative industries. As a result, researchers and policymakers are working together to establish guidelines and regulations to ensure the responsible development and application of these technologies.

The dichotomy between large language models' anthropomorphic appearance and their fundamentally unhuman-like processing of information is an interesting aspect of AI development. While LLMs are designed to interact with users in a human-like manner, mimicking natural language understanding and conversation, their internal processes are vastly different from the way humans think and comprehend information.

LLMs rely on complex algorithms and statistical models to process and generate text based on patterns learned from vast amounts of data. They do not possess emotions, consciousness, or self-awareness, and their ability to "understand" context is purely based on the associations and patterns they have learned during their training.

This dichotomy can be both fascinating and disconcerting, as it raises questions about the nature of intelligence and what it means to be human. It also highlights the importance of recognizing the limitations of LLMs and being cautious in ascribing human-like qualities to these AI systems, as their underlying mechanisms are fundamentally different from human cognition.

Background, History and Definition of AI

Research into the science of Artificial Intelligence (AI) started in the 1950s, stimulated by the invention of computers. In 1950, Alan Turing published his paper Computing Machinery and Intelligence in which he proposed to consider the question “Can machines think?” and then went on to discuss how to build intelligent machines and how to test their intelligence.

He proposed an imitation game, which later became known as the Turing Test, in which a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. If the evaluator cannot reliably tell the machine from the human, the machine is said to have passed the test. The test results do not depend on the machine's ability to give correct answers to questions, only how closely its answers resemble those a human would give.

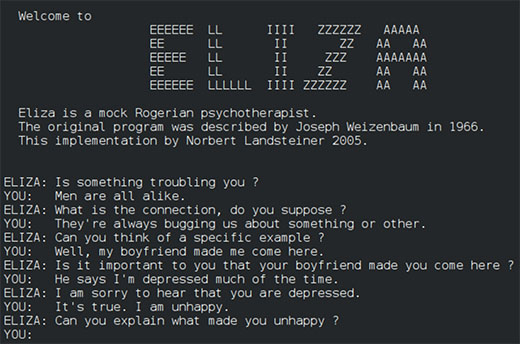

ELIZA, a natural language processing computer program developed from 1964 to 1966 by Joseph Weizenbaum at the MIT Artificial Intelligence Laboratory, achieved cult status. It was one of the first chatbots and one of the first programs capable of attempting the Turing test.

Where it all began

Six years after Turing’s paper, in 1956, the Dartmouth Summer Research Project on Artificial Intelligence took place. It is widely considered to be the founding event of artificial intelligence as a field. The event was organized by John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon. In preparing the workshop, John McCarthy coined the term Artificial Intelligence.

At that time, the scientists thought that human intelligence could be described so precisely that a machine could be made to simulate it. From the proposal for the workshop: “The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.”

Since then, many different definitions of AI have been proposed.

Early Days

For instance, Marvin Minsky (co-founder of the Massachusetts Institute of Technology's AI laboratory) in his book The Society of Mind defined it as “The field of research concerned with making machines do things that people consider to require intelligence.” He went on to state that there is no clear boundary between psychology and AI because the brain itself is a kind of machine.

In psychology, human intelligence is usually characterized by the combination of many diverse behaviors, not just one trait. This includes abilities to learn, form concepts, understand, apply logic and reason, including the capacities to recognize patterns, plan, innovate, solve problems, make decisions, retain information, and use language to communicate.

Research in AI has focused mainly on the same abilities; or, as Patrick Henry Winston, who succeeded Minsky as director of the MIT Artificial Intelligence Laboratory (now the MIT Computer Science and Artificial Intelligence Laboratory), put it in his book Artificial Intelligence: “AI is the study of the computations that make it possible to perceive, reason and act.”

According to this definition, AI leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind.

Knowledge-based Systems

Rather than tackling each problem from scratch, another branch of AI research has sought to embody knowledge in machines: the most efficient way to solve a problem is to already know how to solve it. But this simple assumption presents several problems of its own: discovering how to acquire the knowledge that is needed, learning how to represent it, and developing processes that can exploit the knowledge effectively.

This research has led to many practical knowledge-based problem-solving systems. Some of them are called expert systems because they are based on imitating the methods of particular human experts.

In what has become one of the leading textbooks on AI, in 1995 Stuart J. Russell and Peter Norvig published Artificial Intelligence: A Modern Approach, in which they delve into four potential goals or definitions of AI. They differentiate cognitive computer systems on the basis of rationality and thinking vs. acting:

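Approaches that deal with thought processes and reasoning: systems that think like humans, and systems that think rationally.

Approaches that deal with behavior: systems that act like humans, and systems that act rationally.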

Alan Turing’s definition would have fallen under the category of “systems that act like humans.”

More recently, Francois Chollet, an AI researcher at Google and creator of the machine-learning software library Keras, has argued that intelligence is tied to a system's ability to adapt and improvise in a new environment, to generalize its knowledge and apply it to unfamiliar scenarios: “Intelligence is the efficiency with which you acquire new skills at tasks that you did not previously know about that you did not prepare for… so intelligence is not a skill itself, it's not what you know, it's not what you can do, it's how well and how efficiently you can learn.”

And yet another definition from IBM postulates that “AI is the simulation of human intelligence processes by computers. These processes include learning from constantly changing data, reasoning to make sense of data and self-correction mechanisms to make decisions”.

Methods of AI Research

Early AI research developed into two distinct, and to some extent competing, methods – the top-down (or symbolic) approach, and the bottom-up (or connectionist) approach.

The top-down approach seeks to replicate intelligence by analyzing cognition independent of the biological structure of the brain, in terms of the processing of symbols. In contrast, the bottom-up approach seeks to create artificial neural networks in imitation of the brain’s structure.

Both approaches face difficulties: symbolic techniques work in simplified lab environments but typically break down when confronted with the complexities of the real world; meanwhile, bottom-up researchers have been unable to replicate the nervous systems of even the simplest living things. Caenorhabditis elegans, a much-studied worm, has approximately 300 neurons whose pattern of interconnections is perfectly known. Yet connectionist models have failed to mimic even this worm.

Goals of artificial intelligence – Weak AI versus Strong AI

Artificial Narrow Intelligence

Weak AI, or more fittingly: Artificial Narrow Intelligence (ANI), operates within a limited context and is a simulation of human intelligence. Narrow AI is often focused on performing a single task extremely well and while these machines may seem intelligent, they are operating under far more constraints and limitations than even the most basic human intelligence.

ANI systems are already widely used commercially, for instance in personal assistants such as Siri and Alexa, expert medical diagnosis systems, stock-trading systems, Google search, image recognition software, self-driving cars, and IBM's Watson.

Artificial General Intelligence

Strong AI, or Artificial General Intelligence (AGI), is the kind of artificial intelligence we see in the movies, like the robots from Westworld, Ex Machina, or I, Robot. The ultimate ambition of strong AI is to produce a machine whose overall intellectual ability is indistinguishable from that of a human being. Much like a human being, an AGI system would have a self-aware consciousness and the ability to solve any problem, learn, and plan for the future.

Superintelligence

And then there is the concept of Artificial Super Intelligence (ASI), or superintelligence, that would surpass the intelligence and ability of the human brain. Superintelligence is still entirely theoretical with no practical examples in use today.

Cognitive Computing

A term you might hear in the context of AI is Cognitive Computing. Quite often the terms are used interchangeably. Although both refer to technologies that can perform and/or augment tasks, help better inform decisions, and create interactions that have traditionally required human intelligence, such as planning, reasoning from partial or uncertain information, and learning, there are differences.

Whereas the goal of AI systems is to solve a problem through the best possible algorithm (and not necessarily as humans would do it), cognitive systems mimic human behavior and reasoning to solve complex problems.

The Four Types of AI

Arend Hintze, Professor for Artificial Intelligence, Department of Complex Dynamical Systems and Microdata, at Michigan State University, goes beyond just weak and strong AI and classifies four types of artificial intelligence: reactive machines, limited memory, theory of mind and self-awareness.

Type 1 AI: Reactive machines

The most basic types of AI systems are purely reactive and have the ability neither to form memories nor to use past experiences to inform current decisions. Deep Blue, IBM’s chess-playing supercomputer, which beat international grandmaster Garry Kasparov in the late 1990s, is the perfect example of this type of machine.

Deep Blue can identify the pieces on a chess board and know how each moves. It can make predictions about what moves might be next for it and its opponent. And it can choose the most optimal moves from among the possibilities.

But it doesn’t have any concept of the past, nor any memory of what has happened before. Apart from a rarely used chess-specific rule against repeating the same move three times, Deep Blue ignores everything before the present moment. All it does is look at the pieces on the chess board as it stands right now, and choose from possible next moves.

This type of intelligence involves the computer perceiving the world directly and acting on what it sees. It doesn’t rely on an internal concept of the world. In a seminal paper, AI researcher Rodney Brooks argued that we should only build machines like this. His main reason was that people are not very good at programming accurate simulated worlds for computers to use, what is called in AI scholarship a “representation” of the world.

The current intelligent machines we marvel at either have no such concept of the world, or have a very limited and specialized one for their particular duties. The innovation in Deep Blue’s design was not to broaden the range of possible moves the computer considered. Rather, the developers found a way to narrow its view, to stop pursuing some potential future moves, based on how it rated their outcome. Without this ability, Deep Blue would have needed to be an even more powerful computer to actually beat Kasparov.

Similarly, Google’s AlphaGo, which has beaten top human Go experts, can’t evaluate all potential future moves either. Its analysis method is more sophisticated than Deep Blue’s, using a neural network to evaluate game developments.
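The idea of abandoning lines of play that cannot improve the outcome, described above for Deep Blue, can be illustrated with alpha-beta pruning, a classic game-tree search technique. The following is a minimal sketch on a toy tree, not Deep Blue's or AlphaGo's actual code; the function name `alphabeta`, the nested-list tree format and the leaf ratings are assumptions made for illustration.

```python
import math

def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot matter.

    A node is either a number (the rating of a final position) or a list of
    child nodes. `visited` optionally collects the leaves that were rated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this line
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere
            break
    return best

# Hypothetical toy tree: three possible moves, each with two possible replies.
tree = [[3, 5], [2, 9], [0, 1]]
seen = []
value = alphabeta(tree, True, visited=seen)
print(value, seen)
```

In this toy tree the search rates only four of the six leaves: once a reply worth 2 or 0 is found, the remaining replies to that move cannot change the decision, so they are never examined.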

These methods do improve the ability of AI systems to play specific games better, but they can’t be easily changed or applied to other situations. These computerized imaginations have no concept of the wider world – meaning they can’t function beyond the specific tasks they’re assigned and are easily fooled.

They can’t interactively participate in the world, the way we imagine AI systems one day might. Instead, these machines will behave exactly the same way every time they encounter the same situation. This can be very good for ensuring an AI system is trustworthy: You want your autonomous car to be a reliable driver. But it’s bad if we want machines to truly engage with, and respond to, the world. These simplest AI systems won’t ever be bored, or interested, or sad.

Type 2 AI: Limited memory

This Type 2 class contains machines that can look into the past. Self-driving cars do some of this already. For example, they observe other cars’ speed and direction. That can’t be done in just one moment, but rather requires identifying specific objects and monitoring them over time.

These observations are added to the self-driving cars’ preprogrammed representations of the world, which also include lane markings, traffic lights and other important elements, like curves in the road. They’re included when the car decides when to change lanes, to avoid cutting off another driver or being hit by a nearby car.

But these simple pieces of information about the past are only transient. They aren’t saved as part of the car’s library of experience it can learn from, the way human drivers compile experience over years behind the wheel.

So how can we build AI systems that build full representations, remember their experiences and learn how to handle new situations? Brooks was right in that it is very difficult to do this. Hintze suggests that his own research into methods inspired by Darwinian evolution can start to make up for human shortcomings by letting the machines build their own representations.

Type 3 AI: Theory of mind

We might stop here, and call this point the important divide between the machines we have and the machines we will build in the future. However, it is better to be more specific to discuss the types of representations machines need to form, and what they need to be about.

Machines in the next, more advanced, class not only form representations about the world, but also about other agents or entities in the world. In psychology, this is called “theory of mind” – the understanding that people, creatures and objects in the world can have thoughts and emotions that affect their own behavior.

This is crucial to how we humans formed societies, because it allowed us to have social interactions. Without understanding each other’s motives and intentions, and without taking into account what somebody else knows either about me or the environment, working together is at best difficult, at worst impossible.

If AI systems are indeed ever to walk among us, they’ll have to be able to understand that each of us has thoughts and feelings and expectations for how we’ll be treated. And they’ll have to adjust their behavior accordingly.

Type 4 AI: Self-awareness

The final step of AI development is to build systems that can form representations about themselves. Ultimately, AI researchers will have to not only understand consciousness, but build machines that have it.

This is, in a sense, an extension of the “theory of mind” possessed by Type 3 artificial intelligences. Consciousness is also called “self-awareness” for a reason. (“I want that item” is a very different statement from “I know I want that item.”) Conscious beings are aware of themselves, know about their internal states, and are able to predict feelings of others. We assume someone honking behind us in traffic is angry or impatient, because that’s how we feel when we honk at others. Without a theory of mind, we could not make those sorts of inferences.

While we are probably far from creating machines that are self-aware, we should focus our efforts toward understanding memory, learning and the ability to base decisions on past experiences. This is an important step to understand human intelligence on its own. And it is crucial if we want to design or evolve machines that are more than exceptional at classifying what they see in front of them.

Subsets of Artificial Intelligence

The cognitive technologies that contribute to various AI applications can broadly be summarized in several categories you might have heard about. Let’s explain them one by one:

What are Expert Systems?

These systems gain knowledge about a specific subject and can solve problems as accurately as a human expert on this subject. Expert systems were among the first truly successful forms of AI software. An expert system is divided into two subsystems: the inference engine and the knowledge base. The knowledge base represents facts and rules. The inference engine applies the rules to the known facts to deduce new facts. Inference engines can also include explanation and debugging abilities.
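The split between knowledge base and inference engine can be made concrete with a small forward-chaining loop. This is only a sketch under stated assumptions: the function name `forward_chain`, the rule format (a set of premises plus one conclusion) and the toy medical facts are hypothetical, and real expert systems add features such as explanations and conflict resolution.

```python
def forward_chain(facts, rules):
    """Apply rules to known facts until no new fact can be deduced.

    `rules` is a list of (premises, conclusion) pairs, where `premises`
    is a set of facts that must all be known for the rule to fire.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)  # deduce a new fact
                changed = True
    return known

# Hypothetical knowledge base: two rules, the second builds on the first.
rules = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "refer_to_doctor"),
]

derived = forward_chain({"fever", "cough", "short_of_breath"}, rules)
print(sorted(derived))
```

Here the rules and facts play the role of the knowledge base, while `forward_chain` plays the role of the inference engine: it knows nothing about medicine and only matches premises against known facts.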

What is Fuzzy Logic?

Fuzzy logic is an approach to computing based on ‘degrees of truth’ rather than the usual ‘true or false’ (1 or 0) Boolean logic on which modern computers are based. This standard logic with only two truth values, true and false, makes vague attributes or situations difficult to characterize. Human experts often use rules that contain vague expressions, and so it is useful for an expert system’s inference engine to employ fuzzy logic. AI systems therefore use fuzzy logic to imitate human reasoning and cognition.

Rather than strictly binary cases of truth, fuzzy logic includes 0 and 1 as extreme cases of truth but with various intermediate degrees of truth. IBM's Watson supercomputer is one of the most prominent examples of how variations of fuzzy logic and fuzzy semantics are used.
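As a rough illustration of degrees of truth, the sketch below defines two membership functions and combines them with the commonly used minimum and maximum operators for fuzzy AND and OR. The function names and the temperature and humidity thresholds are assumptions chosen for the example, not values from any particular system.

```python
def clamp01(value):
    """Limit a value to the range 0.0 (fully false) to 1.0 (fully true)."""
    return min(1.0, max(0.0, value))

def warm(temp_c):
    """Degree to which a temperature is 'warm': 0 at 15 C or below, 1 at 25 C or above."""
    return clamp01((temp_c - 15.0) / 10.0)

def humid(percent):
    """Degree to which the air is 'humid': 0 at 40 % or below, 1 at 80 % or above."""
    return clamp01((percent - 40.0) / 40.0)

def fuzzy_and(a, b):
    return min(a, b)

def fuzzy_or(a, b):
    return max(a, b)

def fuzzy_not(a):
    return 1.0 - a

# Vague rule: "the room is uncomfortable if it is warm AND humid".
discomfort = fuzzy_and(warm(22.0), humid(70.0))
print(warm(22.0), humid(70.0), discomfort)
```

With Boolean logic, 22 °C would have to be classified as either warm or not warm. Here it is warm to a degree of about 0.7, and the rule's conclusion inherits that partial truth.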

What is Machine Learning?

The ability of statistical models to develop capabilities and improve their performance over time without the need to follow explicitly programmed instructions. Machine learning enables computers to learn from information and apply that learning without human intervention. This is particularly helpful in situations where a solution is hidden in a huge data set (for instance in weather forecasting or climate modelling) – an area described by the term Big Data.
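One of the simplest instances of learning from data rather than from programmed rules is fitting a straight line by least squares. The sketch below is illustrative only; the function name `fit_line` and the four data points are made up for the example.

```python
def fit_line(xs, ys):
    """Estimate slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: the relationship y = 2x + 1 is never written
# into the program; it is recovered from the examples.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)
prediction = slope * 5 + intercept  # apply what was learned to an unseen input
print(slope, intercept, prediction)
```

The program contains no rule that says "multiply by two and add one". The parameters come entirely from the examples, which is the sense in which the model is not explicitly programmed.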

What are Deep Learning Neural Networks?

A complex form of machine learning involving neural networks with many layers of abstract variables. Neural networks are a subset of machine learning and are at the heart of deep learning algorithms. Their name and structure are inspired by the human brain, mimicking the way that biological neurons signal to one another. Neural networks are a series of algorithms that mimic the operations of a human brain to recognize relationships between vast amounts of data. For instance, in financial services, they are used in a variety of applications, from forecasting and marketing research to fraud detection and risk assessment.
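To show what layers of artificial neurons look like in code, here is a tiny two-layer network that computes XOR, a function a single neuron cannot represent. The weights are hand-picked assumptions for illustration; in a real deep learning system they would be learned from data, and the networks would be far larger.

```python
def step(z):
    """Threshold activation: the neuron 'fires' (1) only if its input is positive."""
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def xor_network(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Output layer: fires when OR is true but AND is not.
    return neuron([h_or, h_and], [1, -1], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer turns the raw inputs into intermediate features, and the output layer combines those features. Stacking many such layers is what the "deep" in deep learning refers to.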

What is Robotic Process Automation (RPA)?

This is software technology that automates repetitive, rules-based processes usually performed by people sitting in front of computers. RPA makes it easy to build, deploy, and manage software robots that emulate humans’ actions interacting with digital systems and software. Just like people, software robots can do things like understand what’s on a screen, complete the right keystrokes, navigate systems, identify and extract data, and perform a wide range of defined actions. By interacting with applications just as humans would, software robots can open email attachments, complete e-forms, record and re-key data, and perform other tasks that mimic human action. But software robots can do it faster and more consistently than people.

What is Natural Language Processing (NLP)?

Natural language processing allows machines to understand and respond to text or voice data in much the same way humans do. This enables conversational interaction between humans and computers. This requires the ability to extract or generate meaning and intent from text in a readable, stylistically natural, and grammatically correct form. NLP drives computer programs that translate text from one language to another, respond to spoken commands, and summarize large volumes of text rapidly - even in real time. In daily life you are already interacting with NLP in the form of voice-operated GPS systems, digital assistants, speech-to-text dictation software, or customer service chatbots.

What is Speech Recognition?

The ability to automatically and accurately recognize and transcribe human speech. It uses natural language processing (NLP) to process human speech into a written format. Many mobile devices incorporate speech recognition into their systems to conduct voice search – e.g., Siri or Alexa – or provide more accessibility around texting.

What is Computer Vision?

The ability to extract meaning and intent out of visual elements, whether characters (in the case of document digitization), or the categorization of content in images such as faces, objects, scenes, and activities. You see this working in Google’s image search service. Powered by convolutional neural networks, computer vision has applications within photo tagging in social media, radiology imaging in healthcare, and self-driving cars’ ability to recognize obstacles.

Examples of Artificial Intelligence

Artificial intelligence has made its way into a wide variety of markets. Here are just a few of the more prominent examples:

Artificial Intelligence in the Transportation Industry

AI is used in the transportation industry to improve efficiency, safety, and customer experience. One way it is used is through autonomous vehicles such as self-driving cars and drones, which are able to navigate and make decisions on their own. This technology can improve safety by reducing human error and can also increase efficiency by allowing for more efficient routes and reducing traffic congestion. Additionally, AI-powered systems can be used in traffic management to optimize traffic flow and reduce travel time.

Another way AI is used in the transportation industry is through predictive maintenance, where AI-powered systems analyze sensor data to predict when maintenance is needed on vehicles or equipment, reducing downtime. AI can also be used in logistics to optimize routes and delivery schedules, and to predict demand for transportation services.

Furthermore, AI can assist in customer service, such as through virtual assistants that provide information about routes, schedules, and delays, and can also help with ticket booking and other tasks. Overall, the use of AI in the transportation industry can lead to improved safety, efficiency, and customer experience.

Artificial Intelligence in Healthcare

Artificial intelligence in healthcare mimics human cognition in the analysis, presentation, and comprehension of complex medical and health care data. Specifically, AI is the ability of computer algorithms to approximate conclusions based solely on input data. The primary aim of health-related AI applications is to analyze relationships between prevention or treatment techniques and patient outcomes. AI programs are applied to practices such as diagnosis processes, treatment protocol development, drug development, personalized medicine, and patient monitoring and care.

For instance, IBM’s Watson understands natural language and can respond to questions asked of it. The system mines patient data and other available data sources to form a hypothesis, which it then presents with a confidence scoring schema. Scientists in Japan reportedly saved a woman’s life by applying Watson to help them diagnose a rare form of cancer. Faced with a 60-year-old woman whose cancer diagnosis was unresponsive to treatment, they supplied Watson with 20 million clinical oncology studies, and it diagnosed the rare leukemia that had stumped the clinicians in just ten minutes.

Other AI applications include using online virtual health assistants and chatbots to help patients and healthcare customers find medical information, schedule appointments, understand the billing process and complete other administrative processes. An array of AI technologies is also being used to predict, fight and understand pandemics such as COVID-19.

Artificial Intelligence in Finance and Banking

AI is utilized in banking and finance in several ways to improve operations and customer service. One way is through fraud detection, where AI-powered systems analyze large amounts of data to detect unusual activity or suspicious transactions, helping to prevent fraud. Another way is through risk management, where AI can be used to analyze market and economic data to help financial institutions identify and manage risks.
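A very simplified stand-in for detecting unusual activity is to flag transactions that lie far from the average amount. The sketch below uses a plain z-score test, which is an assumption made for illustration and much cruder than the models real fraud detection systems rely on; the function name `flag_unusual`, the threshold and the amounts are hypothetical.

```python
from statistics import mean, stdev

def flag_unusual(amounts, threshold=2.0):
    """Return the amounts lying more than `threshold` standard deviations from the mean."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > threshold]

# Hypothetical card transactions: seven ordinary purchases and one outlier.
amounts = [20, 25, 22, 19, 24, 21, 23, 500]
suspicious = flag_unusual(amounts)
print(suspicious)
```

A production system would look at many more signals than the amount, such as location, merchant and timing, and would typically learn what counts as unusual for each customer.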

AI is also used in customer service through chatbots and virtual assistants that can provide assistance with account information, transactions, and other issues. Furthermore, AI-powered systems can be used to analyze customer data to create more personalized and targeted marketing campaigns.

AI can also be used in financial forecasting by analyzing historical data to predict future financial trends and assist in investment decisions. Additionally, AI-powered algorithms can be used to make decisions in trading, such as high-frequency trading. Overall, the use of AI in banking and finance can lead to improved security, better risk management, and enhanced customer service.

Artificial Intelligence in Manufacturing

Manufacturing has been at the forefront of incorporating smart technologies into the workflow. The applications of AI in the field of manufacturing are widespread and range from failure prediction and predictive maintenance to quality assessment, inventory management and pricing decisions.

AI is utilized in manufacturing in various ways to increase efficiency, reduce costs, and improve product quality. One way is through predictive maintenance, where AI-powered systems analyze sensor data from equipment to predict when maintenance is needed, reducing downtime. Another way is through quality control, where AI-powered image recognition and machine learning algorithms can inspect products for defects quickly and accurately.

Automation is also implemented through AI by controlling and optimizing industrial robots for more precise manufacturing processes. AI is also used in supply chain optimization to optimize inventory management, logistics and production scheduling. Predictive modeling is also used where AI-powered systems predict demand for products so that manufacturers can optimize production and inventory levels.

Intelligent process control can also be implemented by using AI-powered systems to optimize process control in manufacturing, for example in chemical plants or oil refineries. Additionally, AI-powered systems can be used to control and optimize the usage of tools and machines in the manufacturing process, which is called intelligent tooling.

Artificial Intelligence in Law and Legal Services

AI is being utilized in the legal industry to improve efficiency, accuracy, and cost-effectiveness in various tasks. One way is through legal research, where AI-powered systems can quickly analyze large amounts of legal documents and case law to assist lawyers in identifying relevant information. Another way is through contract review and management, where AI can be used to identify and extract key information from contracts, reducing the time and effort required for manual review. AI can also assist in document management, by automatically organizing and categorizing legal documents and making them easily searchable.

Additionally, AI-powered systems can assist in e-discovery, which is the process of identifying and producing electronically stored information relevant to legal matters. AI can also be used in legal analytics and predictions, where it analyzes large amounts of legal data to identify patterns and predict outcomes of legal cases. This can be beneficial for litigators, policy makers, and legal researchers.

Furthermore, AI can be used in the field of legal education, where it can be used to create virtual mentors, tutors and legal assistants to help students understand legal concepts and prepare for legal practice.

Overall, the use of AI in law and legal services can lead to improved efficiency, accuracy and cost-effectiveness in legal research, contract review, document management, e-discovery, legal analytics, legal education and other tasks.

Artificial Intelligence in the Military

One of the primary ways in which AI is used in the military is in autonomous systems for intelligence, surveillance, and reconnaissance. These systems use AI algorithms to process and analyze data from sensors and cameras, such as images, videos, and audio recordings. This information is then used to identify and track potential threats, such as enemy vehicles or personnel, and to provide real-time intelligence to military commanders.

A highly controversial topic is the use of AI in military "killer robots," also known as Lethal Autonomous Weapons Systems (LAWS), for target identification, decision-making, and control of weapons systems. These systems use AI algorithms to process data from sensors and cameras, such as images and videos, to identify and track potential targets. Once a target is identified, the AI system can use its decision-making capabilities to determine whether to engage the target, based on pre-programmed rules and constraints. The AI system can then control the weapon system to engage the target, without human intervention.

The use of AI in military killer robots raises ethical and legal questions, chief among them whether the decision to take a human life should ever be delegated to a machine rather than made by a human. Some experts argue that killer robots lack the human judgment and empathy required for life-and-death decisions and that their use can lead to unintended consequences such as collateral damage and escalation of conflicts. There are ongoing debates among governments, militaries, and civil society about the development and deployment of such systems, and about the need for international regulations to govern their use.

AI-powered systems can also be used for target tracking, navigation, and path planning, which can help to improve the effectiveness of military operations. Additionally, AI-powered drones and other unmanned systems can be used for reconnaissance missions in areas that are too dangerous for human soldiers to enter, such as war zones or disaster areas. This can help to reduce the risk to human lives and also provide valuable information that can help to guide military operations.

AI is used in the military in a variety of other ways as well, including in decision-making and planning systems; in training and simulation systems; and in the control of unmanned systems such as drones. These systems can help to improve the efficiency and effectiveness of military operations, and can also be used to reduce the risk to human soldiers. Additionally, AI can be used in logistics and supply chain management, and in the analysis of large amounts of data for intelligence purposes.

Artificial Intelligence in Cybersecurity

AI and machine learning have become essential to information security, as these technologies are capable of swiftly analyzing millions of data sets and tracking down a wide variety of cyber threats – from malware to shady behavior that might result in a phishing attack.

AI is used in cybersecurity in a variety of ways to help protect networks and systems from cyber attacks. One of the main ways is through the use of machine learning algorithms to analyze large amounts of data, such as network traffic or log files, in order to identify and detect potential threats. For example, AI-based systems can be used to detect and block malicious traffic, such as malware or phishing attempts, by recognizing patterns and anomalies in the data.
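As a minimal sketch of the anomaly-detection idea, assume a hypothetical list of per-minute request counts extracted from a log file. The snippet below flags values that deviate strongly from the median. The data, the function name `find_anomalies`, and the threshold are illustrative assumptions; real systems rely on far richer features and learned models.

```python
from statistics import median

def find_anomalies(counts, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold.

    Uses the median and the median absolute deviation (MAD), which are
    distorted less by the outliers we are looking for than mean and stdev.
    """
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        return []  # no spread at all: this method cannot flag anything
    return [
        i for i, c in enumerate(counts)
        if 0.6745 * abs(c - med) / mad > threshold
    ]

# Hypothetical requests per minute; the spike at index 6 stands in for an attack.
requests_per_minute = [120, 118, 125, 122, 119, 121, 950, 123, 117, 120]
suspicious = find_anomalies(requests_per_minute)
print(suspicious)
```

A robust statistic is used here because a single large spike would inflate an ordinary standard deviation and could hide itself.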

Another way AI is used in cybersecurity is through the development of autonomous systems that can respond to cyber attacks in real time. These systems use AI algorithms to analyze data such as network traffic, log files, and endpoint activity to identify and track potential threats. Once a threat is identified, the AI system can use its decision-making capabilities to determine an appropriate response, such as blocking the attack or quarantining the affected system.

Additionally, AI can be used in the field of penetration testing, which is the practice of attempting to infiltrate a computer system, network, web application or other computing resource on behalf of its owners, in order to assess the security of the said systems. AI can be used to create realistic scenarios and simulate the actions of hackers, in order to test the security of a system and identify vulnerabilities that need to be addressed.

In summary, AI is used in cybersecurity to improve the efficiency and effectiveness of security operations, by automating tasks such as threat detection, incident response and penetration testing, as well as providing valuable insights into the nature of cyber threats and vulnerabilities.

Artificial Intelligence in Nanotechnology

Artificial intelligence tools are already applied in nanotechnology research. In scanning probe microscopy, for example, researchers have developed an approach called functional recognition imaging (FR-SPM), which seeks direct recognition of local behaviors from measured spectroscopic responses using neural networks trained on examples provided by an expert. This technique combines artificial neural networks (ANNs) with principal component analysis, which simplifies the input data to the neural network by whitening and decorrelating the data and reducing the number of independent variables.
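To make the whitening and decorrelating step concrete, here is a small sketch of principal component analysis for two-dimensional data, using the closed-form eigen-decomposition of a 2x2 covariance matrix. The sample points and the function name `pca_whiten_2d` are hypothetical. This is not the FR-SPM pipeline itself, only an illustration of the preprocessing idea.

```python
import math

def pca_whiten_2d(points):
    """Decorrelate and whiten 2-D points; return (whitened points, eigenvalues).

    Assumes the points are not all on one line (both eigenvalues > 0).
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the sample covariance matrix [[a, b], [b, c]].
    a = sum(x * x for x, _ in centered) / (n - 1)
    b = sum(x * y for x, y in centered) / (n - 1)
    c = sum(y * y for _, y in centered) / (n - 1)

    # Closed-form eigenvalues of a symmetric 2x2 matrix, largest first.
    mid = (a + c) / 2
    half = math.sqrt(((a - c) / 2) ** 2 + b * b)
    l1, l2 = mid + half, mid - half

    # Unit eigenvector for l1; the second one is perpendicular to it.
    if b != 0:
        norm = math.hypot(l1 - c, b)
        v1 = ((l1 - c) / norm, b / norm)
    else:
        v1 = (1.0, 0.0) if a >= c else (0.0, 1.0)
    v2 = (-v1[1], v1[0])

    # Project onto the eigenvectors (decorrelate), then rescale (whiten).
    whitened = [
        ((x * v1[0] + y * v1[1]) / math.sqrt(l1),
         (x * v2[0] + y * v2[1]) / math.sqrt(l2))
        for x, y in centered
    ]
    return whitened, (l1, l2)

# Hypothetical, strongly correlated measurements.
points = [(2.0, 1.9), (0.5, 0.7), (3.1, 3.0), (1.2, 0.8), (4.0, 4.3), (2.6, 2.2)]
whitened, eigenvalues = pca_whiten_2d(points)

# Keeping only the first component reduces two variables to one.
reduced = [p[0] for p in whitened]
```

After this transformation the two coordinates have unit variance and zero covariance, and dropping the second one keeps the direction that carries most of the variation.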

ANNs have also been used to characterize the structural properties of nanomaterials. For example, these algorithms have been employed to determine the morphology of carbon nanotube turfs by quantifying structural properties such as alignment and curvature.

ANNs have been used to explore the nonlinear relationship between input variables and output responses in the deposition process of transparent conductive oxide.


AI Programming Languages and Programming Frameworks

Today’s AI uses conventional CMOS hardware and the same basic algorithmic functions that drive traditional software. Future generations of AI are expected to inspire new types of brain-inspired circuits and architectures that can make data-driven decisions faster and more accurately than a human being can.

AI programming differs quite a bit from standard software engineering approaches where programming usually starts from a detailed formal specification. In AI programming, the implementation effort is actually part of the problem specification process.

The programming languages that are used to build AI and machine learning applications vary. Each application has its own constraints and requirements, and some languages are better than others in particular problem domains. Languages have also been created and have evolved based on the unique requirements of AI applications.

Due to the fuzzy nature of many AI problems, AI programming benefits considerably if the programming language frees the programmer from the constraints of too many technical constructions (e.g., low-level construction of new data types, manual allocation of memory). Rather, a declarative programming style is more convenient using built-in high-level data structures (e.g., lists or trees) and operations (e.g., pattern matching) so that symbolic computation is supported on a much more abstract level than would be possible with standard imperative languages.

From the requirements of symbolic computation and AI programming, two basic programming paradigms emerged initially as alternatives to the imperative style: the functional and the logical programming style. Both are based on mathematical formalisms, namely recursive function theory and formal logic.

Functional Programming Style

The first practical AI programming language, and one that remains in use today, is the functional language Lisp (short for "LISt Processor"), developed by John McCarthy in the late 1950s. Lisp is based on mathematical function theory and the lambda abstraction.

Besides Lisp, a number of alternative functional programming languages have been developed, in particular ML (which stands for Meta-Language) and Haskell.

Programming in a functional language consists of building function definitions and using the computer to evaluate expressions, i.e., function application with concrete arguments. The major programming task is then to construct a function for a specific problem by combining previously defined functions according to mathematical principles. The main task of the computer is to evaluate function calls and to print the resulting function values.
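The idea of building new functions by combining existing ones can be sketched in Python, which borrows several functional features. The helper names below (`compose`, `square`, `increment`, `sum_list`) are hypothetical and chosen only for illustration.

```python
from functools import reduce

def compose(*functions):
    """Return a function that applies the given functions right to left."""
    return reduce(lambda f, g: lambda x: f(g(x)), functions, lambda x: x)

def square(x):
    return x * x

def increment(x):
    return x + 1

# A new function is built purely by combining previously defined ones.
square_then_increment = compose(increment, square)

def sum_list(items):
    """Recursive sum over a list, in the spirit of Lisp-style list processing."""
    if not items:
        return 0
    head, *tail = items
    return head + sum_list(tail)

# The computer's job is then to evaluate function applications.
result = square_then_increment(4)   # increment(square(4))
total = sum_list([1, 2, 3, 4])
print(result, total)
```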

Logical Programming Style

During the early 1970s, a new programming paradigm appeared, namely logic programming on the basis of predicate calculus. The first and still most important logic programming language is Prolog (an acronym for Programming in Logic), developed by Alain Colmerauer, Robert Kowalski and Philippe Roussel. Problems in Prolog are stated as facts, axioms and logical rules for deducing new facts.

Programming in Prolog consists of the specification of facts about objects and their relationships, and rules specifying their logical relationships. Prolog programs are declarative collections of statements about a problem because they do not specify how a result is to be computed but rather define what the logical structure of a result should be. This is quite different from imperative and even functional programming, in which the focus is on defining how a result is to be computed. Using Prolog, programming can be done at a very abstract level quite close to the formal specification of a problem.
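The flavor of facts, rules, and queries can be imitated in Python. This is only a sketch: the family facts and the helper names `derive_grandparents` and `query` are hypothetical. Prolog itself answers queries through unification and backtracking, whereas this example simply derives the consequences of a single rule up front.

```python
# Facts as (relation, subject, object) triples, like parent(tom, bob) in Prolog.
facts = {
    ("parent", "tom", "bob"),
    ("parent", "bob", "ann"),
    ("parent", "bob", "pat"),
}

def derive_grandparents(known):
    """Rule: grandparent(X, Z) holds if parent(X, Y) and parent(Y, Z)."""
    return {
        ("grandparent", x, z)
        for (r1, x, y1) in known if r1 == "parent"
        for (r2, y2, z) in known if r2 == "parent" and y1 == y2
    }

def query(known, relation, subject=None, obj=None):
    """Return matching facts; None plays the role of an unbound variable."""
    return sorted(
        fact for fact in known
        if fact[0] == relation
        and (subject is None or fact[1] == subject)
        and (obj is None or fact[2] == obj)
    )

knowledge = facts | derive_grandparents(facts)

# Roughly the Prolog query: ?- grandparent(tom, Who).
answers = query(knowledge, "grandparent", subject="tom")
print(answers)
```

Note that the program states what a grandparent is, not how to search for one; the search is left to generic machinery.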

Object-oriented Languages

Object-oriented languages belong to another well-known programming paradigm. In such languages, the primary means of specifying problems is to define abstract data structures, also called objects or classes. A class consists of a data structure together with its main operations, often called methods. An important characteristic is that classes can be arranged in a hierarchy of classes and subclasses. Popular object-oriented languages are Eiffel, C++ and Java.
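A class hierarchy of this kind might look as follows in Python. The agent classes and the vacuum-cleaner rules are hypothetical examples, not taken from any particular library.

```python
class Agent:
    """Base class: a data structure (the name) plus its operations (methods)."""

    def __init__(self, name):
        self.name = name

    def act(self, percept):
        raise NotImplementedError("subclasses decide how to act")

    def describe(self):
        return f"{self.name} ({type(self).__name__})"


class ReflexAgent(Agent):
    """Subclass that maps each percept directly to an action via a rule table."""

    def __init__(self, name, rules):
        super().__init__(name)
        self.rules = rules

    def act(self, percept):
        return self.rules.get(percept, "wait")


class LoggingReflexAgent(ReflexAgent):
    """Sub-subclass that reuses the inherited behavior and records a history."""

    def __init__(self, name, rules):
        super().__init__(name, rules)
        self.history = []

    def act(self, percept):
        action = super().act(percept)
        self.history.append((percept, action))
        return action


vacuum = LoggingReflexAgent("vacuum", {"dirty": "clean", "obstacle": "turn"})
actions = [vacuum.act(p) for p in ["dirty", "clear", "obstacle"]]
print(vacuum.describe(), actions)
```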

As new languages are being developed and applied to AI problems, the workhorses of AI (Lisp and Prolog) continue to be used extensively. For instance, the IBM team that programmed Watson used Prolog to parse natural-language questions into new facts that could be used by Watson.

More recent programming languages that have found applications in AI:

Python

Python is a general-purpose interpreted language that includes features from many languages (such as object-oriented features and functional features inspired by Lisp). What makes Python useful in the development of intelligent applications is the large number of modules available outside the core language. These modules cover numerical computing (NumPy), machine learning (scikit-learn), natural language and text processing (NLTK), and many neural network libraries that cover a broad range of topologies.

Java

Java was developed in the early 1990s. It is relatively easy to code in and highly scalable, which makes it desirable for AI projects. It is also portable and can easily be deployed on different platforms because it uses virtual machine technology.

C++

The C language has been around for a long time but continues to be relevant. IBM's Deep Blue, which first faced world champion Garry Kasparov in 1996, was among the strongest chess-playing systems of its time. Deep Blue was written in C and was capable of evaluating 200 million positions per second. In 1997, it became the first chess computer to defeat a reigning world champion in a match played under standard tournament conditions. C++ is an extension of C and has object-oriented, generic, and functional features in addition to facilities for low-level memory manipulation.

R

Like Python, the R language (and the software environment in which it is used) draws much of its strength from add-on packages. R is an open-source environment for statistical programming and data mining, implemented largely in C. Because a considerable amount of modern machine learning is statistical in nature, R is a useful language that has grown in popularity since its first stable release in 2000. R includes a large set of libraries that cover various techniques; it also includes the ability to extend the language with new features.

Julia

Julia is one of the newer languages on the list and was created with a focus on high-performance computing in scientific and technical fields. Julia includes several features that directly apply to AI programming. While it is a general-purpose language and can be used to write any application, many of its features are well suited for numerical analysis and computational science.

Scala

Scala is a general-purpose language that blends object-oriented and functional programming styles and is used, among other things, for machine learning. It was designed to be concise, and many of its design decisions aim to address criticisms of Java.

AI Frameworks and Libraries

There are numerous tools (AI frameworks and AI libraries) that make the creation of AI applications, such as deep learning, neural network, and natural language processing applications, easier and faster.

These are open source collections of AI components, machine learning algorithms and solutions that perform specific, well-defined operations for common use cases to allow rapid prototyping.

Caffe

Caffe (which stands for Convolutional Architecture for Fast Feature Embedding) is a deep learning framework made with expression, speed, and modularity in mind. It is open source, written in C++, with a Python interface.

Scikit-learn

Scikit-learn, initially started in 2007 as a Google Summer of Code project, is a free software machine learning library for the Python programming language.

Google Cloud AutoML

AutoML enables developers with limited machine learning expertise to train high-quality models specific to their business needs. It offers a free trial; beyond that, pricing is pay-as-you-go.

Amazon Machine Learning

Amazon offers a large set of AI and machine learning services and tools.

TensorFlow

TensorFlow is a free and open-source software library developed by the Google Brain team for machine learning and artificial intelligence. It is used for both research and production at Google.

TensorFlow is a symbolic math library based on dataflow and differentiable programming. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks.

It offers a highly capable framework for executing the numerical computations needed for machine learning (including deep learning). On top of that, the framework provides APIs for most major languages, including Python, C, C++, Java and Rust.

TensorFlow represents data as multidimensional arrays (called tensors) and represents computations as dataflow graphs, in which the nodes are mathematical operations and the edges carry tensors between them. Programmers can rely on an object-oriented language like Python to treat those tensors and nodes as objects, coupling them to build the foundations for machine learning operations.
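The dataflow idea can be illustrated with a toy graph in plain Python. To be clear, this is not TensorFlow's API: the `Node` class and the operation names are hypothetical, and nested lists stand in for tensors. It only shows how operations become nodes whose inputs are the outputs of other nodes.

```python
class Node:
    """One operation in a toy dataflow graph; its inputs are other nodes."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = inputs
        self.value = value

    def evaluate(self):
        if self.op == "const":
            return self.value
        # Evaluate upstream nodes first; data flows along the edges.
        args = [node.evaluate() for node in self.inputs]
        if self.op == "add":
            left, right = args
            return [[a + b for a, b in zip(r1, r2)]
                    for r1, r2 in zip(left, right)]
        if self.op == "matmul":
            left, right = args
            columns = list(zip(*right))
            return [[sum(a * b for a, b in zip(row, col)) for col in columns]
                    for row in left]
        raise ValueError(f"unknown operation: {self.op}")


# Build the graph for y = x @ w + b; nothing is computed yet.
x = Node("const", value=[[1.0, 2.0]])
w = Node("const", value=[[3.0], [4.0]])
b = Node("const", value=[[5.0]])
y = Node("add", inputs=(Node("matmul", inputs=(x, w)), b))

# Computation happens only when the output node is evaluated.
output = y.evaluate()
print(output)
```

Separating graph construction from evaluation is what lets a real framework analyze, optimize, and differentiate the computation before running it.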

Keras

Keras is an open-source software library that provides a Python interface for artificial neural networks. Keras acts as an interface for the TensorFlow library.

Keras features a plug-and-play framework that programmers can use to build deep learning neural network models, even if they are unfamiliar with the specific tensor algebra and numerical techniques.

PyTorch

PyTorch is an open-source machine learning library based on the Torch library (a scientific computing framework for creating deep learning algorithms). It is used for applications such as computer vision and natural language processing and was originally developed by Facebook's AI Research lab. It is free and open-source software; Python is its primary interface, and a C++ interface is also available.

PyTorch is a direct competitor to TensorFlow. Many developers find writing optimized code in PyTorch somewhat easier than in TensorFlow, mainly due to its comprehensive documentation, dynamic graph computations and support for parallel processing.

Apache MXNet

Apache MXNet is an open-source deep learning framework used to train and deploy deep neural networks. It offers features similar to TensorFlow and PyTorch, with a particular emphasis on distributed training of deep learning models across multiple machines.

It is scalable, allowing for fast model training, and supports a flexible programming model and multiple programming languages (including C++, Python, Java, Julia, Matlab, JavaScript, Go, R, Scala, Perl, and Wolfram Language).

Frequently Asked Questions on Artificial Intelligence

1. What is Artificial Intelligence (AI)?

AI is the field of computer science dedicated to solving cognitive problems commonly associated with human intelligence, such as learning, problem solving, and pattern recognition. AI is an interdisciplinary science with multiple approaches, but advancements in machine learning and deep learning are creating a paradigm shift in virtually every sector of the tech industry.

2. What are the types of Artificial Intelligence?

AI can be classified into two main types: Narrow AI, which is designed to perform a narrow task (like facial recognition or voice commands), and General AI, which is AI that has all the cognitive abilities of a human being.

3. How does AI work?

At a high level, AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms. This allows the software to learn automatically from patterns or features in the data. AI is a broad field, and different AI models work differently.

4. What are the applications of AI?

AI has a wide range of applications including, but not limited to, healthcare, finance, education, transportation, and more. It can be used for anything from diagnosing diseases to automated customer service.

5. What is Machine Learning (ML)?

Machine Learning is a subset of AI that involves the practice of using algorithms to parse data, learn from it, and then make predictions or decisions about something in the world. So instead of hand-coding software routines with a specific set of instructions to accomplish a particular task, the machine is “trained” using large amounts of data and algorithms to learn how to perform the task.

6. What are the types of Machine Learning?

The three main types of machine learning are supervised learning, unsupervised learning, and reinforcement learning. Supervised learning involves learning from labeled data, unsupervised learning involves learning from unlabeled data, and reinforcement learning involves learning from reward-based feedback.
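As a small illustration of supervised learning, the sketch below classifies a new point by a majority vote among its nearest labeled neighbors (the k-nearest-neighbors method). The training points, labels, and function name `knn_predict` are made up for the example.

```python
import math
from collections import Counter

def knn_predict(training, point, k=3):
    """Predict a label by majority vote among the k closest labeled examples."""
    nearest = sorted(training, key=lambda ex: math.dist(ex[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Labeled data: each example pairs a feature vector with its known label.
training = [
    ((1.0, 1.2), "small"), ((0.8, 1.0), "small"), ((1.3, 0.9), "small"),
    ((4.0, 4.2), "large"), ((4.5, 3.8), "large"), ((3.9, 4.4), "large"),
]

prediction = knn_predict(training, (4.1, 4.0))
print(prediction)
```

The labels are what make this supervised: an unsupervised method would have to discover the two groups from the coordinates alone.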

7. What is Deep Learning?

Deep Learning is a subset of Machine Learning where artificial neural networks, algorithms inspired by the human brain, learn from large amounts of data. Similar to how we learn from experience, the deep learning algorithm would perform a task repeatedly, tweaking it a little every time to improve the outcome.
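The "tweak a little every time" loop can be shown with a single artificial neuron trained by gradient descent. A real deep network stacks many layers of such units, so this is a deliberately simplified sketch; the data, learning rate, and number of passes are assumptions chosen for the example.

```python
def train_neuron(samples, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeatedly nudging w and b to reduce the error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = (w * x + b) - target
            # Gradient of the squared error (up to a constant factor):
            # move each parameter a small step against its gradient.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

# Hypothetical training data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))
```

Each pass over the data changes the parameters only slightly, but the accumulated adjustments bring the neuron's output close to the targets.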

8. How is AI changing the business landscape?

AI is transforming the business landscape by enabling companies to enhance their capabilities, automate processes, and engage with customers in new ways. From personalized marketing campaigns to efficient supply chain management, AI is enabling new possibilities and creating competitive advantages for businesses.

9. What are the challenges faced in implementing AI?

Implementing AI involves several challenges including data privacy concerns, lack of skilled AI professionals, need for large amounts of data for training AI systems, issues of trust in AI decisions, and potential job displacement due to automation.

10. What is the future of Artificial Intelligence?

While it's hard to predict the future accurately, AI is likely to be a key component in many aspects of everyday life. Some areas where it will continue to have an impact include automation of jobs, advancement in healthcare, improvements in data analysis, and enhancements in personal assistants.

11. What is the impact of AI on society?

AI has the potential to greatly improve many aspects of society, including healthcare, education, and transportation. However, it also presents challenges, including ethical questions, job automation, and privacy concerns.

12. What are the ethical considerations in AI?

The ethical considerations in AI include privacy, bias, transparency, and job displacement. Ensuring AI is used responsibly to benefit all and not to harm is a major field of study in itself.

13. What are large language models in the context of AI?

Large language models like OpenAI's ChatGPT are a type of AI that uses machine learning, particularly deep learning, to generate human-like text. These models are trained on a large amount of text data and can answer questions, write essays, summarize texts, translate languages, and even generate poetry.

14. How can businesses leverage AI?

Businesses can leverage AI in a multitude of ways. It can be used to automate tasks, analyze large amounts of data, improve decision-making, enhance customer service, streamline operations, and much more. The key is to identify the areas where AI can have the most impact and strategically implement it.

15. How can one learn more about AI?

There are many resources available for learning more about AI. These include online courses, textbooks, academic journals, conferences, and webinars. Some well-known platforms for online courses include Coursera, edX, and Udacity. It is also worth keeping up with the latest AI news through tech news websites and forums.
