| Jul 12, 2018 |

Reducing the data demands of smart machines

|

|

(Nanowerk News) Machine learning (ML) systems today learn by example, ingesting tons of data that has been individually labeled by human analysts to generate a desired output. As these systems have progressed, deep neural networks (DNN) have emerged as the state of the art in ML models. DNN are capable of powering tasks like machine translation and speech or object recognition with a much higher degree of accuracy.

|

|

However, training DNN requires massive amounts of labeled data–typically 109 or 1010 training examples. The process of amassing and labeling this mountain of information is costly and time consuming.

|

|

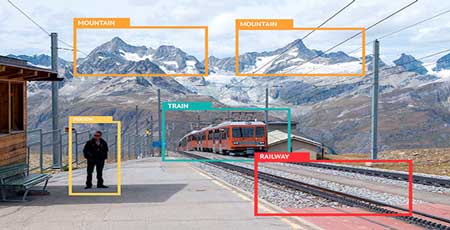

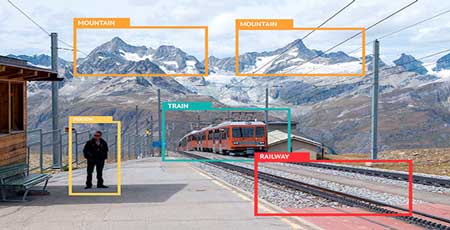

| Machine learning systems today learn by example, ingesting tons of data that has been individually labeled by human analysts to generate a desired output. The goal of the LwLL program is to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples. (Image: DARPA)

|

|

Beyond the challenges of amassing labeled data, most ML models are brittle and prone to breaking when there are small changes in their operating environment. If changes occur in a room’s acoustics or a microphone’s sensors, for example, a speech recognition or speaker identification system may need to be retrained on an entirely new data set. Adapting or modifying a model can take almost as much time and energy as creating one from scratch.

|

|

To reduce the upfront cost and time associated with training and adapting an ML model, DARPA is launching a new program called Learning with Less Labels (LwLL). Through LwLL, DARPA will research new learning algorithms that require greatly reduced amounts of information to train or update.

|

|

“Under LwLL, we are seeking to reduce the amount of data required to build a model from scratch by a million-fold, and reduce the amount of data needed to adapt a model from millions to hundreds of labeled examples,” said Wade Shen, a DARPA program manager in the Information Innovation Office (I2O) who is leading the LwLL program. “This is to say, what takes one million images to train a system today, would require just one image in the future, or requiring roughly 100 labeled examples to adapt a system instead of the millions needed today.”

|

|

To accomplish its aim, LwLL researchers will explore two technical areas. The first focuses on building learning algorithms that efficiently learn and adapt. Researchers will research and develop algorithms that are capable of reducing the required number of labeled examples by the established program metrics without sacrificing system performance.

|

|

“We are encouraging researchers to create novel methods in the areas of meta-learning, transfer learning, active learning, k-shot learning, and supervised/unsupervised adaptation to solve this challenge,” said Shen.

|

|

The second technical area challenges research teams to formally characterize machine learning problems, both in terms of their decision difficulty and the true complexity of the data used to make decisions.

|

|

“Today, it’s difficult to understand how efficient we can be when building ML systems or what fundamental limits exist around a model’s level of accuracy. Under LwLL, we hope to find the theoretical limits for what is possible in ML and use this theory to push the boundaries of system development and capabilities,” noted Shen.

|

|

Interested proposers have an opportunity to learn more about the LwLL program during a Proposers Day, scheduled for Friday, July 13 from 9:30am-4:30pm ET at the DARPA Conference Center, located at 675 N. Randolph St., Arlington, Virginia, 22203. For additional information visit here. A full description of the program will be made available in a forthcoming Broad Agency Announcement.

|