| May 26, 2022 | |

A helping hand for robotic manipulator design (w/video) |


| (Nanowerk News) MIT researchers have created an interactive design pipeline that streamlines and simplifies the process of crafting a customized robotic hand with tactile sensors. | |

| Typically, a robotics expert may spend months manually designing a custom manipulator, largely through trial and error. Each iteration could require new parts that must be designed and tested from scratch. By contrast, this new pipeline doesn’t require any manual assembly or specialized knowledge. | |

| Akin to building with digital LEGOs, a designer uses the interface to construct a robotic manipulator from a set of modular components that are guaranteed to be manufacturable. The user can adjust the palm and fingers of the robotic hand, tailoring it to a specific task, and then easily integrate tactile sensors into the final design. | |


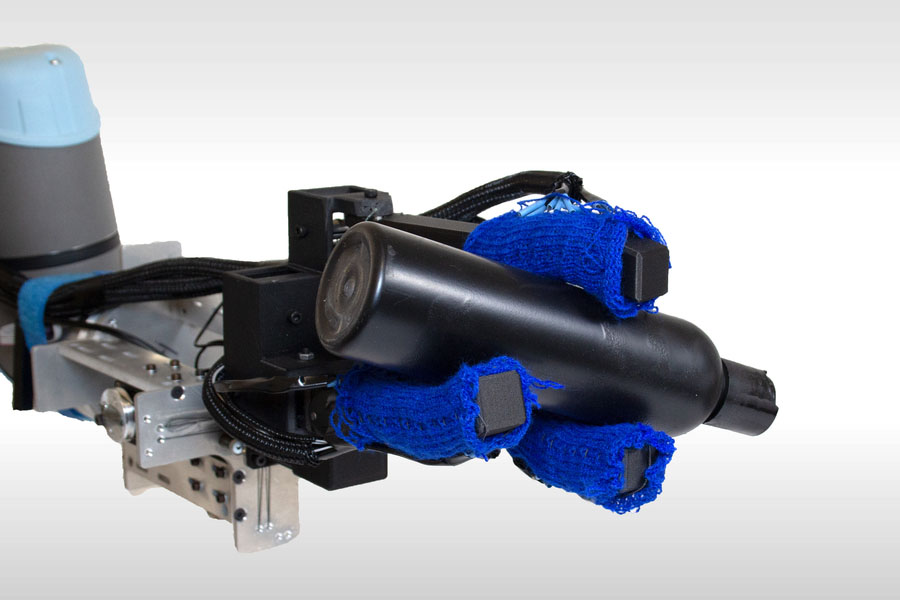

| MIT researchers have created an integrated design pipeline that enables a user with no specialized knowledge to quickly craft a customized 3D-printable robotic hand. (Image: Lara Zlokapa) | |

| Once the design is finished, the software automatically generates 3D printing and machine knitting files for manufacturing the manipulator. Tactile sensors are incorporated through a knitted glove that fits snugly over the robotic hand. These sensors enable the manipulator to perform complex tasks, such as picking up delicate items or using tools. | |

| “One of the most exciting things about this pipeline is that it makes design accessible to a general audience. Rather than spending months or years working on a design, and putting a lot of money into prototypes, you can have a working prototype in minutes,” says lead author Lara Zlokapa, who will graduate this spring with her master’s degree in mechanical engineering. | |

| Joining Zlokapa on the paper are her advisors Pulkit Agrawal, professor in the Computer Science and Artificial Intelligence Laboratory (CSAIL), and Wojciech Matusik, professor of electrical engineering and computer science. Other co-authors include CSAIL graduate students Yiyue Luo and Jie Xu, mechanical engineer Michael Foshey, and Kui Wu, a senior research scientist at Tencent America. The research is being presented at the International Conference on Robotics and Automation ("An Integrated Design Pipeline for Tactile Sensing Robotic Manipulators"). | |

Mulling over modularity |


| Before she began work on the pipeline, Zlokapa paused to consider the concept of modularity. She wanted to create enough components that users could mix and match with flexibility, but not so many that they were overwhelmed by choices. | |

| She thought creatively about component functions, rather than shapes, and came up with about 15 parts that can combine to make trillions of unique manipulators. | |

| The researchers then focused on building an intuitive interface in which the user mixes and matches components in a 3D design space. A set of production rules, known as graph grammar, controls how users can combine pieces based on the way each component, such as a joint or finger shaft, fits together. | |

| “If we think of this as a LEGO kit where you have different building blocks you can put together, then the grammar might be something like ‘red blocks can only go on top of blue blocks’ and ‘blue blocks can’t go on top of green blocks.’ Graph grammar is what enables us to ensure that each and every design is valid, meaning it makes physical sense and you can manufacture it,” she explains. | |
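
The "which block can go on which" idea in the quote can be sketched in a few lines of Python. This is only an illustration: the component names and the `ALLOWED_CHILDREN` table are hypothetical, since the article does not list the actual rules, and the sketch checks attachment rules on a finished design rather than applying the production rules of a full graph grammar.

```python
# Hypothetical component types and attachment rules (not from the paper).
ALLOWED_CHILDREN = {
    "palm": {"joint"},
    "joint": {"finger_shaft"},
    "finger_shaft": {"joint", "fingertip"},
    "fingertip": set(),
}


def is_valid(design):
    """Check a design given as nested (component_type, [children]) tuples.

    The root must be a palm, and every parent-child attachment must be
    permitted by ALLOWED_CHILDREN.
    """
    def check(node):
        kind, children = node
        if kind not in ALLOWED_CHILDREN:
            return False
        return all(
            child[0] in ALLOWED_CHILDREN[kind] and check(child)
            for child in children
        )

    return design[0] == "palm" and check(design)


finger = ("joint", [("finger_shaft", [("fingertip", [])])])
good_hand = ("palm", [finger, finger])
bad_hand = ("palm", [("fingertip", [])])  # fingertip attached directly to the palm
```

Under these assumed rules, `is_valid(good_hand)` accepts the two-finger hand, while `is_valid(bad_hand)` rejects the design that skips the joint.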

| Once the user has created the manipulator structure, they can deform components to customize it for a specific task. For instance, perhaps the manipulator needs fingers with slimmer tips to handle office scissors or curved fingers that can grasp bottles. | |

| During this deformation stage, the software surrounds each component with a digital cage. Users stretch or bend components by dragging the corners of each cage. The system automatically constrains those movements to ensure the pieces still connect properly and the finished design remains manufacturable. | |
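
A rough two-dimensional sketch of cage-based deformation follows. It assumes a four-corner cage with bilinear interpolation and a simple per-axis limit on how far a corner may be dragged. Both are stand-ins chosen for illustration; the article does not describe the actual cage geometry or constraint rules, and the names `clamp_drag`, `deform`, and `max_shift` are hypothetical.

```python
def clamp_drag(corner, drag, max_shift=0.5):
    """Move a cage corner by `drag`, limiting each axis to +/- max_shift."""
    dx = max(-max_shift, min(max_shift, drag[0]))
    dy = max(-max_shift, min(max_shift, drag[1]))
    return (corner[0] + dx, corner[1] + dy)


def deform(point_uv, cage):
    """Map a point with bilinear coordinates (u, v) in [0, 1]^2 into the cage.

    `cage` lists corners in the order: bottom-left, bottom-right,
    top-right, top-left.
    """
    u, v = point_uv
    weights = ((1 - u) * (1 - v), u * (1 - v), u * v, (1 - u) * v)
    x = sum(w * c[0] for w, c in zip(weights, cage))
    y = sum(w * c[1] for w, c in zip(weights, cage))
    return (x, y)


cage = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
# The user drags the top-right corner far to the right; the drag is clamped.
cage[2] = clamp_drag(cage[2], (2.0, 0.0))
center = deform((0.5, 0.5), cage)
bottom_mid = deform((0.5, 0.0), cage)  # a point on the untouched bottom edge
```

Points near the dragged corner move with it, while points on the untouched bottom edge stay put. That is the kind of property a real system could rely on to keep a component attached to its neighbor.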

Fits like a glove |


| After customization, the user identifies locations for tactile sensors. These sensors are integrated into a knitted glove that fits securely around the 3D-printed robotic manipulator. The glove consists of two fabric layers, one that contains horizontal piezoelectric fibers and another with vertical fibers. Piezoelectric material produces an electric signal when squeezed. Tactile sensors are formed where the horizontal and vertical piezoelectric fibers intersect; they convert pressure stimuli into electric signals that can be measured. | |
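
Because each sensor sits at the crossing of one horizontal and one vertical fiber, the glove's output can be thought of as a grid of readings indexed by row and column. The sketch below assumes such a grid and picks out the strongest contact. The function name, the threshold, and the sample values are all made up for illustration and do not come from the researchers' software.

```python
def strongest_contact(readings, threshold=0.1):
    """Return (row, col, value) of the largest reading, or None if all are below threshold.

    readings[r][c] is the signal at the crossing of horizontal fiber r
    and vertical fiber c (arbitrary units, illustrative values only).
    """
    best = None
    for r, row in enumerate(readings):
        for c, value in enumerate(row):
            if value >= threshold and (best is None or value > best[2]):
                best = (r, c, value)
    return best


readings = [
    [0.00, 0.02, 0.01],
    [0.03, 0.80, 0.05],
    [0.00, 0.04, 0.02],
]
contact = strongest_contact(readings)
```

Here the only reading above the threshold is at the crossing of the second horizontal and second vertical fiber, so `contact` identifies that intersection as the point being pressed.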

| “We used gloves because they are easy to install, easy to replace, and easy to take off if we need to repair anything inside them,” Zlokapa explains. | |

| Plus, with gloves, the user can cover the entire hand with tactile sensors, rather than embedding them in the palm or fingers, as is the case with other robotic manipulators (if they have tactile sensors at all). | |

| With the design interface complete, the researchers produced custom manipulators for four complex tasks: picking up an egg, cutting paper with scissors, pouring water from a bottle, and screwing in a wing nut. The wing nut manipulator, for instance, had one lengthened and offset finger, which prevented the finger from colliding with the nut as it turned. That successful design required only two iterations. | |

| The egg-grabbing manipulator never broke or dropped the egg during testing, and the paper-cutting manipulator could use a wider range of scissors than any existing robotic hand the researchers could find in the literature. | |

| But as they tested the manipulators, the researchers found that the sensors produce a lot of noise because of the uneven weave of the knitted fibers, which hampers their accuracy. They are now working on more reliable sensors that could improve manipulator performance. | |

| The researchers also want to explore the use of additional automation. Since the graph grammar rules are written in a way that a computer can understand, algorithms could search the design space to determine optimal configurations for a task-specific robotic hand. With autonomous manufacturing, the entire prototyping process could be done without human intervention, Zlokapa says. | |
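
As a toy illustration of what searching a design space could look like, the sketch below enumerates a tiny set of hypothetical designs and keeps the one with the best score. Everything here is an assumption: the two design parameters, the `toy_score` objective, and the brute-force enumeration are placeholders, not the method the researchers propose.

```python
from itertools import product

# Hypothetical design parameters (not from the paper).
FINGER_COUNTS = (2, 3, 4, 5)
FINGER_LENGTHS_CM = (4, 6, 8)


def toy_score(count, length_cm, object_width_cm=6.5):
    """Made-up objective: prefer finger length near the object width and fewer fingers."""
    reach_error = abs(length_cm - object_width_cm)
    return -reach_error - 0.1 * count


def best_design():
    """Exhaustively score every (finger count, finger length) pair."""
    candidates = product(FINGER_COUNTS, FINGER_LENGTHS_CM)
    return max(candidates, key=lambda design: toy_score(*design))
```

With this made-up objective, `best_design()` selects two fingers of 6 cm. A realistic search would need a task-specific score, for example one computed from simulation, and a smarter strategy than brute force, given that the real design space holds trillions of manipulators.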

| “Now that we have a way for a computer to explore this design space, we can work on answering the question of, ‘Is the human hand the optimal shape for doing everyday tasks?’ Maybe there is a better shape? Or maybe we want more or fewer fingers, or fingers pointing in different directions? This research doesn’t fully answer that question, but it is a step in that direction,” she says. | |

| “The paper presents an exciting idea and an elegant system design,” says Wenzhen Yuan, assistant professor in the Robotics Institute at Carnegie Mellon University, who was not involved with this research. “It provides a new way of thinking about robot design in this new era where customization and versatility of robots are of high importance. It made a good bridge between mechanical design, computer graphics, and computational fabrication. I can foresee many applications of the system and lots of potential in the methodology.” |

| Source: By Adam Zewe, MIT |
