Apr 14, 2023

Boosting AI with optimized phase change memory


(Nanowerk Spotlight) Phase change memory (PCM) is a type of non-volatile memory technology that stores data at the nanoscale by switching a specialized material between crystalline and amorphous phases. In the crystalline state, the material exhibits low electrical resistance, while in the amorphous state it has high resistance. By applying electrical heating pulses of different shapes, with rapid cooling used to freeze the material in the amorphous state, the phase can be switched. This allows data to be written and read either as binary values (0s and 1s) or as continuous analog values based on the material's resistance.
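To make the binary-versus-analog distinction concrete, here is a minimal Python sketch of how a read circuit might interpret a PCM cell's resistance. The resistance values, the read threshold, the logarithmic mapping, and the convention that the crystalline (low-resistance) state encodes 1 are all illustrative assumptions, not parameters from the study.

```python
import math

# Illustrative (hypothetical) device values; real PCM cells vary widely.
R_CRYSTALLINE = 10e3    # ohms: low-resistance, crystalline state
R_AMORPHOUS = 1e6       # ohms: high-resistance, amorphous state
READ_THRESHOLD = 100e3  # ohms: hypothetical boundary used for binary reads


def read_bit(resistance_ohms: float) -> int:
    """Binary read, assuming crystalline (low R) encodes 1 and amorphous (high R) encodes 0."""
    return 1 if resistance_ohms < READ_THRESHOLD else 0


def read_analog_level(resistance_ohms: float) -> float:
    """Analog read: map resistance onto [0, 1] on a log scale.

    1.0 means fully crystalline, 0.0 fully amorphous; intermediate values
    correspond to intermediate resistance states of the cell.
    """
    r = min(max(resistance_ohms, R_CRYSTALLINE), R_AMORPHOUS)  # clamp to the device range
    log_lo, log_hi = math.log10(R_CRYSTALLINE), math.log10(R_AMORPHOUS)
    return (log_hi - math.log10(r)) / (log_hi - log_lo)


if __name__ == "__main__":
    print(read_bit(12e3))            # 1
    print(read_bit(800e3))           # 0
    print(read_analog_level(100e3))  # midway between the two states on a log scale
```

The log scale is a design choice: because the two states differ by orders of magnitude in resistance, a linear mapping would squeeze most intermediate levels into a tiny fraction of the range.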

Phase change memory is an emerging technology with great potential for advancing analog in-memory computing, particularly for deep neural networks and neuromorphic computing. Various factors, such as resistance values, memory window, and resistance drift, affect the performance of PCM in these applications. So far, it has been challenging for researchers to compare PCM devices for in-memory computing based solely on their individual device characteristics, which often involve trade-offs and are correlated with one another.

Another challenge is that, although analog in-memory computing can greatly increase the speed and reduce the power consumption of AI computing, it may suffer from reduced accuracy due to imperfections in the analog memory devices.
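The speed and energy advantage comes from computing where the weights are stored: in a crossbar of analog memory devices, each weight is a conductance, inputs are applied as voltages, and Ohm's law plus Kirchhoff's current law yield a matrix-vector product as summed currents. The pure-Python sketch below illustrates that principle and how device imperfections perturb the result. The function name, the conductance and voltage values, and the simple multiplicative Gaussian noise model are assumptions for illustration, not the study's simulator.

```python
import random
from typing import List, Optional


def crossbar_mvm(
    conductances: List[List[float]],
    voltages: List[float],
    noise_std: float = 0.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Output currents I_i = sum_j G_ij * V_j for an idealized crossbar.

    noise_std is a hypothetical relative (multiplicative) conductance error;
    0.0 gives the exact matrix-vector product.
    """
    if rng is None:
        rng = random.Random(0)
    currents = []
    for row in conductances:
        current = 0.0
        for g, v in zip(row, voltages):
            g_actual = g * (1.0 + rng.gauss(0.0, noise_std))  # imperfect device
            current += g_actual * v  # Ohm's law; currents add on the shared line (Kirchhoff)
        currents.append(current)
    return currents


if __name__ == "__main__":
    G = [[1e-6, 2e-6], [3e-6, 4e-6]]  # siemens (hypothetical)
    V = [0.1, 0.2]                    # volts (hypothetical)
    ideal = crossbar_mvm(G, V)
    noisy = crossbar_mvm(G, V, noise_std=0.05, rng=random.Random(42))
    for i, n in zip(ideal, noisy):
        print(f"ideal {i:.3e} A, noisy {n:.3e} A, relative error {abs(n - i) / i:.2%}")
```

The whole product is computed in one analog step per row, which is where the speed and power savings come from; the price is that every device error feeds directly into the output.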

New research, published in Advanced Electronic Materials ("Optimization of Projected Phase Change Memory for Analog In-Memory Computing Inference"), addresses these issues by 1) extensively benchmarking PCM devices in large neural networks, offering valuable guidelines for optimizing these devices in the future, and 2) improving and optimizing analog memory devices made with phase change materials, ultimately enhancing accuracy for AI computing.

Ning Li, the first author of the study, who at the time was working at IBM Research in Yorktown Heights and Albany and is now an Associate Professor at Lehigh University, and his IBM colleagues explain: "First, we discovered that many device characteristics can be tuned systematically using a liner layer introduced in our prior work. Second, we found a way to optimize these device characteristics from a system point of view using extensive system-level simulations." These two advances together enabled the team to identify the best devices.

In this work, the team created models of the drift and noise behavior of PCM devices and used them to assess how the devices perform in neural network inference. They evaluated large neural networks with tens of millions of weights, implemented with PCM devices both with and without projection liners, and tested a variety of deep neural networks (DNNs) and datasets at multiple time-steps after programming. Weights are the parameters within a neural network that determine the strength of the connections between neurons; in PCM-based analog in-memory computing, they are stored as resistance values in the PCM devices. Projection liners are additional layers, made of a non-phase-change material, introduced into the PCM device structure.
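PCM conductance is widely described as drifting over time according to a power law, G(t) = G(t0) * (t / t0)^(-nu), where the drift coefficient nu varies from device to device, and every read adds some noise. The Python sketch below uses that generic model to show why inference error grows between 1 second and 1 month after programming. It is not the study's fitted model: the parameter values, the Gaussian spread of nu, the relative read-noise term, and the function names are illustrative assumptions, and no drift compensation is applied.

```python
import random
from typing import List

T0 = 1.0                      # s: reference read time after programming (assumption)
ONE_MONTH = 30 * 24 * 3600.0  # s


def read_conductance(g0: float, t: float, nu_mean: float, nu_std: float,
                     read_noise: float, rng: random.Random) -> float:
    """One read of a device programmed to g0, observed t seconds after programming."""
    nu = max(0.0, rng.gauss(nu_mean, nu_std))      # device-specific drift coefficient
    g = g0 * (t / T0) ** (-nu)                     # power-law drift
    return g * (1.0 + rng.gauss(0.0, read_noise))  # hypothetical relative read noise


def mvm_relative_error(weights: List[List[float]], inputs: List[float], t: float,
                       nu_mean: float, nu_std: float, read_noise: float,
                       seed: int = 0) -> float:
    """Mean relative error of a matrix-vector product computed with drifted, noisy weights.

    Weights are treated directly as (positive) conductances for simplicity.
    """
    rng = random.Random(seed)
    errors = []
    for row in weights:
        ideal = sum(w * x for w, x in zip(row, inputs))
        actual = sum(read_conductance(w, t, nu_mean, nu_std, read_noise, rng) * x
                     for w, x in zip(row, inputs))
        errors.append(abs(actual - ideal) / abs(ideal))
    return sum(errors) / len(errors)


if __name__ == "__main__":
    rng = random.Random(1)
    W = [[rng.uniform(0.1, 1.0) for _ in range(64)] for _ in range(16)]
    x = [rng.uniform(0.0, 1.0) for _ in range(64)]
    params = dict(nu_mean=0.05, nu_std=0.01, read_noise=0.02)  # hypothetical values
    for label, t in [("1 second", 1.0), ("1 month", ONE_MONTH)]:
        print(label, round(mvm_relative_error(W, x, t, **params), 4))
```

At 1 second only read noise contributes, while after a month the accumulated drift dominates, which mirrors the short-term versus long-term comparison in the figure below.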


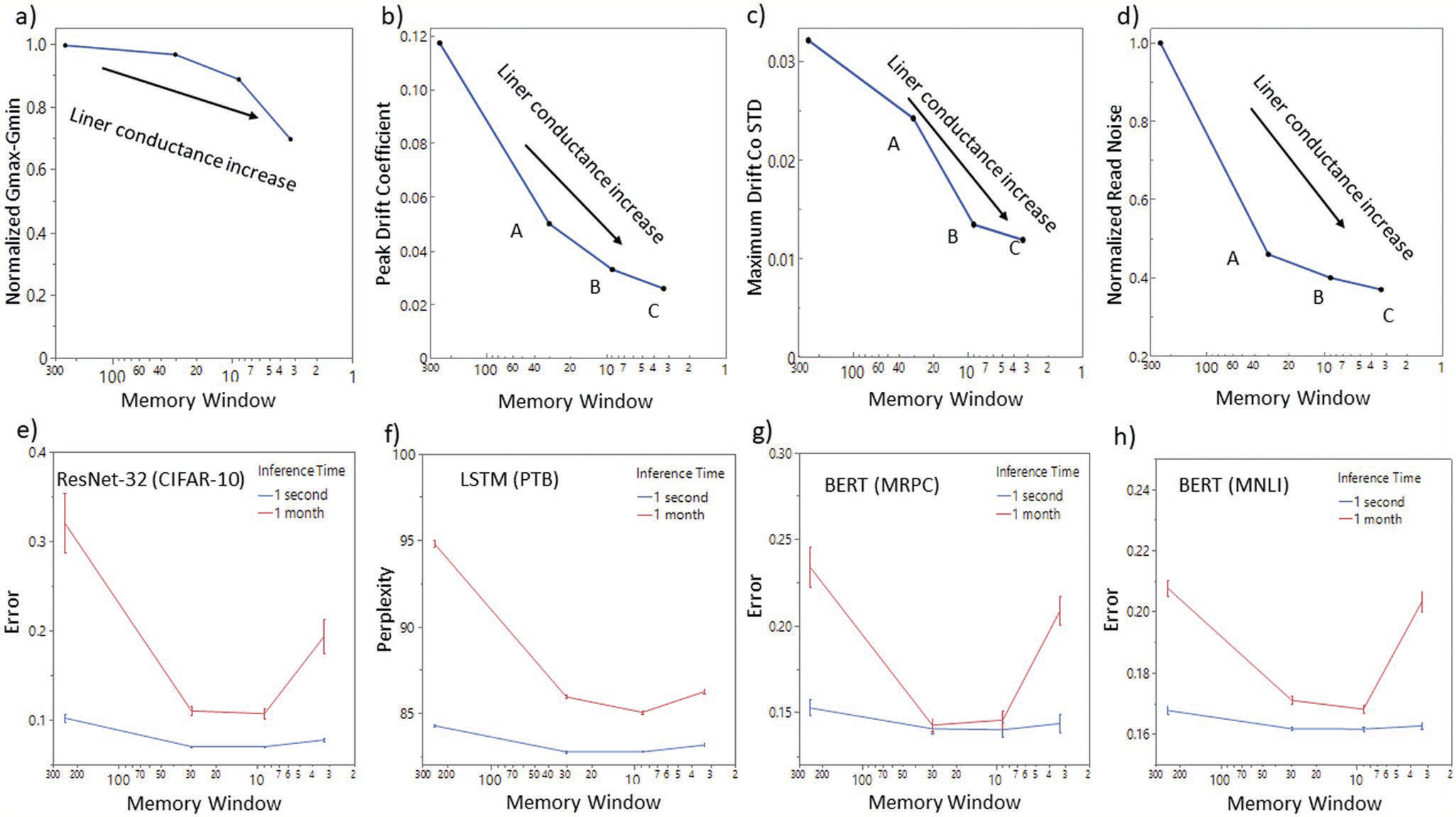

Measured characteristics of PCM devices and their impact on network accuracy as a function of the PCM memory window: a) programming range Gmax–Gmin, b) peak drift coefficient, c) standard deviation of the drift coefficient, d) normalized read noise, e) ResNet-32 (CIFAR-10) inference error at short term (1 second) and long term (1 month) after programming, f) LSTM (PTB) inference error at 1 second and 1 month after programming, g) BERT (MRPC) inference error at 1 second and 1 month after programming, h) BERT (MNLI) inference error at 1 second and 1 month after programming. (Reprinted with permission from Wiley-VCH Verlag)

The study finds that devices with projection liners perform well across various DNN types, including recurrent neural networks (RNNs), convolutional neural networks (CNNs), and transformer-based networks. The researchers also examined the impact of different device characteristics on network accuracy and identified a range of target device specifications for PCM with liners that can lead to further improvements.

Unlike previous reports on PCM devices for AI computing, this work ties device-level results to the end results of computing chips running large, useful deep neural networks. Dr. Li explains that PCM devices for in-memory computing are difficult to compare for AI applications using device characteristics alone. The study addresses this problem by extensively benchmarking PCM devices in various networks under various weight-mapping conditions, and by offering guidelines for PCM device optimization.
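Weight mapping decides how a network's signed weights are turned into device conductances. As a point of reference, one widely used scheme stores each weight in a differential pair, w proportional to G+ - G-, with each device confined to the programming range between Gmin and Gmax. The sketch below shows that scheme; it is not necessarily the mapping used in the paper, and G_MIN, G_MAX, w_max, and the function names are hypothetical.

```python
from typing import Tuple

G_MIN = 0.1e-6  # siemens: hypothetical lowest programmable conductance
G_MAX = 25e-6   # siemens: hypothetical highest programmable conductance


def map_weight(w: float, w_max: float) -> Tuple[float, float]:
    """Map a signed weight in [-w_max, w_max] to a (G_plus, G_minus) conductance pair.

    Positive weights are programmed on G_plus, negative ones on G_minus;
    the unused device is left at G_MIN. Out-of-range weights are clipped.
    """
    w = max(-w_max, min(w_max, w))
    span = G_MAX - G_MIN
    g_plus = G_MIN + span * max(w, 0.0) / w_max
    g_minus = G_MIN + span * max(-w, 0.0) / w_max
    return g_plus, g_minus


def unmap_weight(g_plus: float, g_minus: float, w_max: float) -> float:
    """Recover the weight from the pair: w = (G_plus - G_minus) * w_max / (G_MAX - G_MIN)."""
    return (g_plus - g_minus) * w_max / (G_MAX - G_MIN)


if __name__ == "__main__":
    layer = [0.8, -0.3, 0.05, -1.2]
    w_max = max(abs(w) for w in layer)  # one possible choice: scale by the layer's largest |w|
    for w in layer:
        gp, gm = map_weight(w, w_max)
        print(f"w={w:+.2f}  G+={gp * 1e6:.2f} uS  G-={gm * 1e6:.2f} uS  "
              f"recovered={unmap_weight(gp, gm, w_max):+.2f}")
```

The choice of w_max is one of the "weight-mapping conditions" a benchmark can vary: a smaller w_max gives small weights more of the programming range but clips large ones, while a larger w_max avoids clipping at the cost of resolution.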

Because the team showed that device characteristics can be tuned continuously and that these characteristics are correlated with one another, the devices can be optimized systematically.
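Once characteristics can be tuned and their correlations are known, choosing a device becomes a search over candidate specifications scored by system-level simulation rather than by any single metric. The toy Python sketch below illustrates that idea: it scores hypothetical candidates by simulated weight error at 1 second and 1 month after programming and keeps the one with the smallest worst-case error. The candidate names and numbers, the per-device error metric, and the worst-case criterion are assumptions for illustration; the study itself evaluated full DNNs on real datasets.

```python
import random
from dataclasses import dataclass
from typing import List

ONE_MONTH = 30 * 24 * 3600.0  # seconds


@dataclass
class DeviceSpec:
    name: str
    nu_mean: float     # peak (mean) drift coefficient
    nu_std: float      # device-to-device spread of the drift coefficient
    read_noise: float  # relative read noise


def weight_error(spec: DeviceSpec, t: float, n_devices: int = 5000, seed: int = 0) -> float:
    """Mean absolute deviation of normalized conductance (programmed to 1.0 at t = 1 s)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_devices):
        nu = max(0.0, rng.gauss(spec.nu_mean, spec.nu_std))
        g = t ** (-nu) * (1.0 + rng.gauss(0.0, spec.read_noise))  # drift, then read noise
        total += abs(g - 1.0)
    return total / n_devices


def pick_best(candidates: List[DeviceSpec]) -> DeviceSpec:
    """Choose the candidate whose worse of the two time-step errors is smallest."""
    return min(candidates,
               key=lambda s: max(weight_error(s, 1.0), weight_error(s, ONE_MONTH)))


if __name__ == "__main__":
    candidates = [  # purely hypothetical specifications
        DeviceSpec("A", nu_mean=0.06, nu_std=0.02, read_noise=0.05),
        DeviceSpec("B", nu_mean=0.01, nu_std=0.005, read_noise=0.02),
        DeviceSpec("C", nu_mean=0.005, nu_std=0.003, read_noise=0.04),
    ]
    for s in candidates:
        print(s.name, round(weight_error(s, 1.0), 3), round(weight_error(s, ONE_MONTH), 3))
    print("best:", pick_best(candidates).name)
```

With these made-up numbers, the candidate with the lowest read noise wins at 1 second but loses over a month to a lower-drift candidate, which is the kind of trade-off that makes single-metric comparisons misleading.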

Using their optimization strategy, the researchers significantly reduced the effects of PCM drift and noise on deep neural networks, achieving much better accuracy both shortly after programming and over the long term.

"Potential applications of our work include improved speed, reduced power, and reduced cost in language processing, image recognition, and even broader AI applications, such as ChatGPT," Li points out.

As a result of this work, the researchers envision that large neural network computation will become faster, greener, and cheaper. The next stages in their investigations include further optimizing PCM devices and implementing them in computer chips.

"The future direction for this research field is to enable real products that customers find useful," Li concludes. "Although analog systems use imperfect analog devices, they offer significant advantages in speed, power, and cost. The challenge lies in identifying suitable applications and enabling them."

By Michael Berger. Michael is the author of three books published by the Royal Society of Chemistry: "Nano-Society: Pushing the Boundaries of Technology", "Nanotechnology: The Future is Tiny", and "Nanoengineering: The Skills and Tools Making Technology Invisible".

Copyright © Nanowerk LLC
