Jan 13, 2021

Using neural networks for faster X-ray imaging

(Nanowerk News) It sounds like a dispatch from the distant future: a computer system that can not only reconstruct images from reams of X-ray data at hundreds of times the speed of current methods, but can learn from experience and design better and more efficient ways of calculating those reconstructions. But with the next generation of X-ray light sources on the horizon — and with them, a massive uptick in the amount of data they will generate — scientists have a reason to pursue that future, and quickly.

In a recent paper published in Applied Physics Letters ("AI-enabled high-resolution scanning coherent diffraction imaging"), a team of computer scientists from two U.S. Department of Energy (DOE) Office of Science User Facilities at the DOE’s Argonne National Laboratory, the Advanced Photon Source (APS) and the Center for Nanoscale Materials (CNM), has demonstrated the use of artificial intelligence (AI) to speed up the process of reconstructing images from coherent X-ray scattering data.

Traditional X-ray imaging techniques (like medical X-ray images) are limited in the amount of detail they can provide. This has led to the development of coherent X-ray imaging methods that can provide images from deep within materials at a resolution of a few nanometers or finer. These techniques generate X-ray images without the need for lenses, by diffracting or scattering the beam off samples and directly onto detectors.

The data captured by those detectors contains all the information needed to reconstruct high-fidelity images, and computational scientists can do this with advanced algorithms. These images can then help scientists design better batteries, build more durable materials and develop better medications and treatments for diseases.

The process of using computers to assemble images from coherently scattered X-ray data is called ptychography, and the team used a neural network that learns how to pull that data into a coherent form. Hence the name of their innovation: PtychoNN.

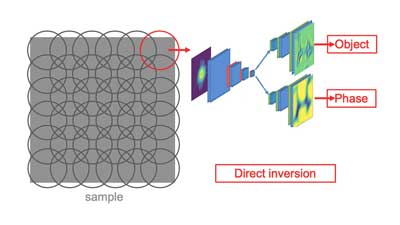

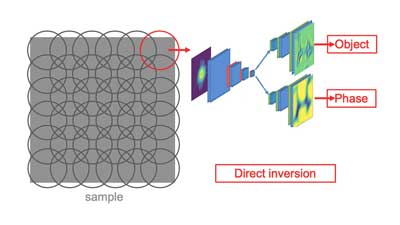

PtychoNN uses AI techniques to reconstruct both the amplitude and the phase from X-ray data, providing images that scientists can use. (Image: Mathew Cherukara / Argonne National Laboratory.)

“The genesis of this goes back a few years,” said Mathew Cherukara, the first author on the paper and a computational scientist who has worked at both the APS and CNM.

The APS is scheduled to undergo a massive upgrade in the coming years, which will increase the brightness of its X-ray beams by up to 500 times. A similar increase in data is expected, and the current computational methods of reconstructing images are already struggling to keep pace.

“We were concerned that after the upgrade, data rates will be too large for traditional methods of imaging analysis to work,” Cherukara said. “Artificial intelligence methods can keep up, and produce images hundreds of times faster than the traditional method.”

PtychoNN also solves one of the biggest issues facing computer scientists working on X-ray scattering experiments: the problem of phase.

Challenge accepted

Imagine an Olympic-size swimming pool, full of swimmers. Now imagine looking up at the reflection of light off of the water on the ceiling of the building, just above the pool. If someone asked you to figure out, just from those flickers of light on the ceiling, where the swimmers are in the pool, could you do it?

That, according to Martin Holt, is what reconstructing an image from coherent X-ray scattering data is like. Holt is an interim group leader at CNM and one of the authors of the PtychoNN paper. His job is to use sophisticated computer systems to build pictures out of scattered photon data — or, essentially, to look at the water’s reflection on the ceiling and make an image of the swimmers.

When an X-ray beam strikes a sample, the light is diffracted and scatters, and the detectors around the sample collect that light. It’s then up to Holt and scientists like him to turn that data into information scientists can use. The challenge, however, is that the photons in the X-ray beam carry two pieces of information (the amplitude, or the brightness of the beam, and the phase, or how much the wave is shifted as it passes through the sample), while the detectors capture only one.

“Because the detectors can only detect amplitude and they cannot detect the phase, all that information is lost,” Holt said. “So we need to reconstruct it.”
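
To make the lost-phase problem concrete, here is a minimal sketch in Python. It is an illustration only, not the team's code: it assumes a one-dimensional sample with made-up amplitude and phase values, and it models the far-field scattering pattern as a Fourier transform, a standard textbook approximation that the article itself does not spell out. The names `dft` and `detector_reading` are hypothetical.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform of a list of complex samples."""
    n = len(signal)
    return [
        sum(signal[k] * cmath.exp(-2j * math.pi * j * k / n) for k in range(n))
        for j in range(n)
    ]


def detector_reading(wave):
    """What an intensity detector records: squared magnitude, no phase."""
    return [abs(value) ** 2 for value in dft(wave)]


# Hypothetical 1D "sample": every point has an amplitude and a phase.
amplitudes = [1.0, 0.8, 0.5, 0.9, 1.0, 0.3, 0.7, 1.0]
phases = [0.0, 0.4, 1.1, -0.6, 0.2, 0.9, -1.2, 0.0]
sample = [a * cmath.exp(1j * p) for a, p in zip(amplitudes, phases)]

# A different sample: the same features, moved sideways by three points.
shifted = sample[3:] + sample[:3]

reading_a = detector_reading(sample)
reading_b = detector_reading(shifted)

# The samples differ, yet their detector readings match to rounding error.
same_reading = all(
    math.isclose(a, b, abs_tol=1e-9) for a, b in zip(reading_a, reading_b)
)
print("samples identical:", sample == shifted)
print("detector readings identical:", same_reading)
```

Moving the sample changes only the phase of the scattered wave, so a detector that throws the phase away records the same pattern for both. That is one simple case of the ambiguity a reconstruction has to resolve.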

The good news is, scientists can do it. The bad news is, the process is slower than those scientists would like. Part of the challenge is on the data acquisition end. In order to reconstruct the phase data from coherent diffraction imaging experiments, the current algorithms require scientists to collect much more amplitude data from their sample, which takes longer. But the actual reconstruction from that data takes some time as well.
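
The cost of collecting extra data can be estimated with simple arithmetic. The sketch below is an illustration under stated assumptions: it supposes a square raster scan in which neighbouring beam positions share a fixed fraction of the beam width, and the field size, beam size and 50 percent overlap are hypothetical numbers chosen only to keep the arithmetic easy. The function names are also hypothetical.

```python
import math


def scan_points(field_width, beam_width, overlap):
    """Number of beam positions along one axis needed to cover field_width.

    overlap is the fraction of the beam width shared by neighbouring
    positions (0.0 means neighbouring positions just touch).
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if field_width <= beam_width:
        return 1
    step = beam_width * (1.0 - overlap)
    return 1 + math.ceil((field_width - beam_width) / step)


def patterns_for_raster(field_width, beam_width, overlap):
    """Diffraction patterns recorded in a square two-dimensional raster scan."""
    return scan_points(field_width, beam_width, overlap) ** 2


# Hypothetical numbers in arbitrary units, for illustration only.
field, beam = 100.0, 10.0
with_overlap = patterns_for_raster(field, beam, overlap=0.5)
without_overlap = patterns_for_raster(field, beam, overlap=0.0)

print(with_overlap)                    # 361
print(without_overlap)                 # 100
print(with_overlap / without_overlap)  # 3.61
```

In this toy case, requiring half of each beam footprint to overlap its neighbour more than triples the number of diffraction patterns that must be recorded, and each pattern costs measurement time.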

This is where PtychoNN comes in. Using AI techniques, the team of researchers has demonstrated that computers can be taught to predict and reconstruct images from X-ray data, and can do it 300 times faster than the traditional method. More than that, though, PtychoNN is able to speed up the process on both ends.

“What we are proposing doesn’t require the overlapping information traditional algorithms need,” said Tao Zhou, a postdoc with Argonne’s X-ray Science Division (XSD) and a co-author on the paper. “The AI can be trained to predict the image on a point-to-point basis.”
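
The point-to-point idea can be sketched as a function that turns one diffraction pattern into one small patch of amplitude and one of phase, without looking at neighbouring scan points. The toy below is not PtychoNN: the class `TwoHeadNet`, its layer sizes, its activation choices and its random, untrained weights are all assumptions made for illustration. It shows only the data flow, in which one shared set of features feeds two outputs.

```python
import math
import random


class TwoHeadNet:
    """Toy, untrained stand-in for a trained network (not PtychoNN itself)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.encoder = matrix(n_hidden, n_in)      # shared features
        self.amp_head = matrix(n_out, n_hidden)    # amplitude output
        self.phase_head = matrix(n_out, n_hidden)  # phase output

    @staticmethod
    def _apply(weights, vector):
        """Matrix-vector product with plain Python lists."""
        return [sum(w * v for w, v in zip(row, vector)) for row in weights]

    def predict(self, pattern):
        """Map ONE flattened diffraction pattern to (amplitude, phase) patches."""
        hidden = [max(0.0, h) for h in self._apply(self.encoder, pattern)]  # ReLU
        # Assumed output ranges: amplitude in (0, 1), phase in [-pi, pi].
        amplitude = [1.0 / (1.0 + math.exp(-a)) for a in self._apply(self.amp_head, hidden)]
        phase = [math.pi * math.tanh(p) for p in self._apply(self.phase_head, hidden)]
        return amplitude, phase


# Hypothetical scan: five flattened diffraction patterns of 16 pixels each.
rng = random.Random(1)
patterns = [[rng.random() for _ in range(16)] for _ in range(5)]

net = TwoHeadNet(n_in=16, n_hidden=8, n_out=4)
predictions = [net.predict(p) for p in patterns]  # one pass per scan point

# Each prediction depends only on its own pattern, not on its neighbours.
alone = net.predict(patterns[2])
print(predictions[2] == alone)
```

Because every scan point is handled independently, a scheme like this needs no overlap between neighbouring measurements, and the work is one fixed-cost pass per pattern rather than a repeated refinement over the whole data set.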

Higher learning

Rather than use simulated images to train the neural network, the team used real X-ray data taken at beamline 26-ID at the APS, operated by CNM. Since that beamline is used for nanoscience, its optics focus the X-ray beam down to a very small size. For this experiment, the team imaged an object (in this case, a piece of tungsten etched with random features) and presented the network with less information than would normally be needed to reconstruct a full image.

“There are two key takeaways,” Cherukara said. “If data acquisition is the same as today’s method, PtychoNN is 300 times faster. But it can also reduce the amount of data that needs to be acquired to produce images.”

Cherukara noted that a reconstruction performed with less information naturally yields an image of poorer quality, but it still yields an image, where traditional algorithmic methods would not be able to produce one. He said scientists sometimes come up against time constraints that do not allow a full data set to be captured, or work with damaged samples from which a full data set cannot be collected, and PtychoNN can generate usable images even in those circumstances.

All of this efficiency, the team said, bodes well for PtychoNN as a new way forward after the APS Upgrade. This approach will allow data analysis and image recovery to keep up with the increase in data. The next step is to move beyond proof-of-concept, generate full 3D and time-resolved images, and incorporate PtychoNN into the APS workflow.

“What’s next is showing that it works on more data sets and implementing it for everyday use,” said Ross Harder, physicist and lead developer of coherent diffraction imaging instrumentation with XSD, and a co-author on the paper.

Doing that, Cherukara said, could even result in a self-improving system that is constantly learning from every diffraction experiment at the APS. He envisions a program running silently in the background, becoming more efficient with each data set it observes.

For Holt, an innovation like PtychoNN is a natural outgrowth of the way Argonne combines resources to solve problems.

“We have great computing resources at Argonne, and one of the best light sources in the world, and a center that focuses on nanotechnology,” he said. “That’s the real strength of Argonne, that these are all at the same lab.”
