| May 08, 2024 | |

Scientists develop energy-efficient memristive hardware for transparent AI decision-making |

|

| (Nanowerk Spotlight) Artificial intelligence has rapidly progressed in recent years, transforming fields from healthcare to finance to transportation. AI systems can now match or exceed human-level performance on many complex tasks. However, as AI becomes more ubiquitous, a critical challenge has emerged: the "black box" problem. Most cutting-edge AI systems, powered by deep neural networks, make decisions in ways that are extremely difficult for humans to understand or interpret. | |

| This lack of transparency poses major risks as AI is deployed in high-stakes domains. How can we trust the judgments of AI systems in healthcare, criminal justice, or autonomous vehicles if we don't know the reasoning behind their decisions? Biased or unfair AI outcomes could go undetected. Harmful or unintended behaviors could emerge. For AI to be safely integrated into society, developing more transparent and explainable AI systems is crucial. | |

| In pursuit of this goal, the field of explainable AI (XAI) has emerged in recent years. XAI aims to develop AI systems that can provide clear insights into how they arrive at decisions. By opening up the black box, XAI could enable greater human understanding, trust, and oversight of AI. | |

| However, implementing XAI in practice has proven challenging. Generating explanations for the behavior of complex neural networks requires significant computational overhead. Identifying which factors drive an AI decision means perturbing the input repeatedly and re-running the model each time, which is highly resource-intensive. As a result, deploying XAI at scale has so far been infeasible. | |
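To make that overhead concrete, here is a minimal sketch of occlusion-style perturbation analysis in plain Python. The toy linear `model`, its weights, and the helper `occlusion_saliency` are hypothetical illustrations and not the method used in the paper. The point is the evaluation count: explaining one decision on an input with n features takes n + 1 model runs instead of one.

```python
def model(x, weights):
    """Toy linear scorer standing in for a neural network (hypothetical)."""
    return sum(w * v for w, v in zip(weights, x))


def occlusion_saliency(x, weights):
    """Zero out one feature at a time and record how much the score changes."""
    calls = 0
    baseline = model(x, weights)
    calls += 1
    saliency = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = 0.0              # perturb (occlude) feature i
        score = model(perturbed, weights)
        calls += 1
        saliency.append(abs(baseline - score))
    return saliency, calls


weights = [0.5, -2.0, 0.0, 1.0]         # assumed toy weights
x = [1.0, 1.0, 1.0, 1.0]
saliency, calls = occlusion_saliency(x, weights)
print("saliency per feature:", saliency)
print("model evaluations:", calls)
```

For an image with thousands of pixels and a deep network in place of the toy scorer, the same loop multiplies the inference cost by the number of perturbations.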

| Now, a team of researchers in South Korea has developed a novel hardware solution that could help unlock the potential of XAI. As reported in the journal Advanced Materials ("Memristive Explainable Artificial Intelligence Hardware"), they have created an XAI hardware framework powered by memristors, nanoscale electrical components whose resistance depends on the history of the voltage or current applied to them. By performing XAI operations directly in hardware rather than software, their "memristive XAI" system achieves dramatic gains in energy efficiency compared to conventional approaches. | |

|

|

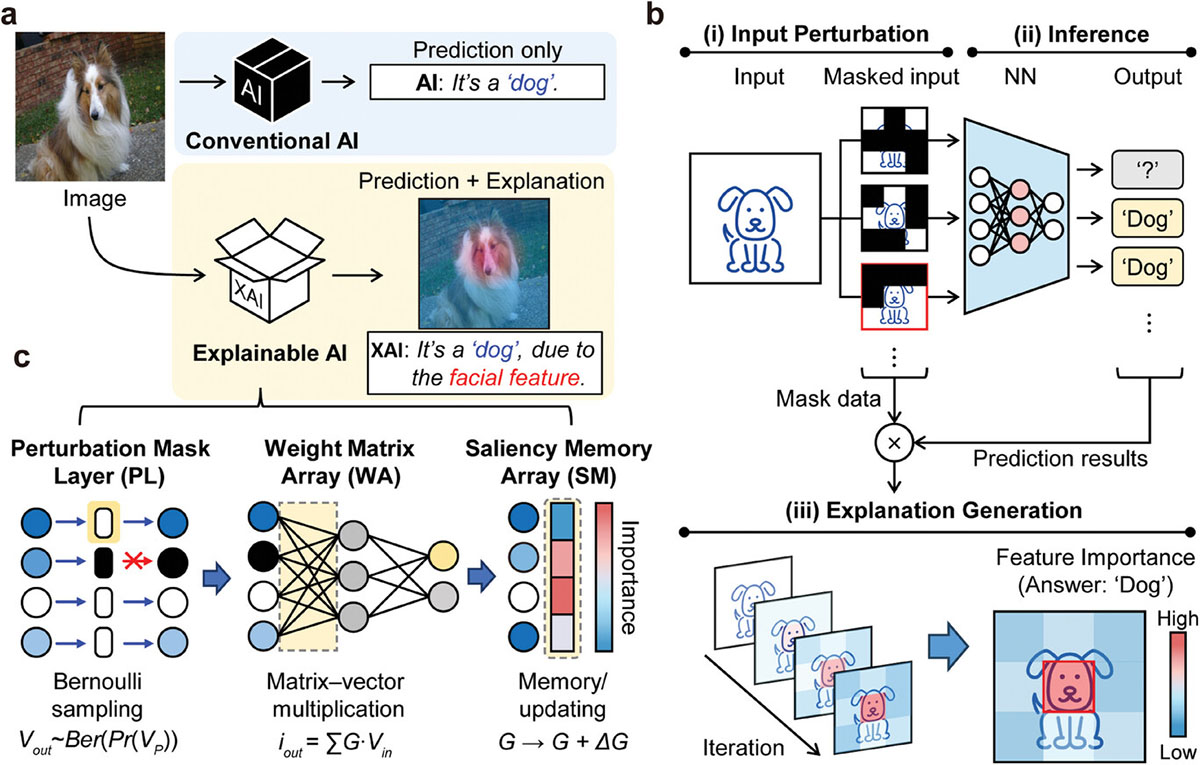

| Overview of perturbation-based explainable AI (XAI) system. a) A schematic comparing a conventional AI system and an XAI system. b) A schematic overview of the perturbation-based XAI algorithm, including three core operation steps. c) The required hardware for the three core functions in the perturbation-based XAI: perturbation mask layer (PL) for input perturbation, weight matrix array (WA) for matrix–vector multiplication in inference, and saliency memory array (SM) for signal integration in explanation generation. These functions can be built using scalable two-terminal memristors. (Image: Reprinted from DOI:10.1002/adma.202400977, CC BY) | |

| "Memristors offer various nonlinear and dynamic electrical characteristics based on physical phenomena," explained Professor Kyung Min Kim, who led the research. "This enables memristive hardware to perform complex functions like the perturbations and analysis required for XAI in a much simpler and more efficient way." | |

| The researchers' memristive XAI system incorporates three distinct types of memristors fabricated in crossbar arrays. One type, based on niobium oxide, leverages stochastic switching behavior to generate random input perturbations. Another, using hafnium oxide, performs the matrix computations that underpin neural network inference. The third integrates these signals over time to identify which input features had the greatest influence on the AI model's decision. | |
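The three roles described above (random perturbation, matrix computation, and signal integration) can be sketched in software as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the keep probability `p_keep`, the trial count, and the toy 4-pixel, 2-class weight matrix are all hypothetical, and a seeded pseudo-random generator stands in for the stochastic switching of the physical devices.

```python
import random


def perturbation_mask(n, rng, p_keep=0.5):
    """Step 1: random binary mask (software stand-in for stochastic switching)."""
    return [1 if rng.random() < p_keep else 0 for _ in range(n)]


def infer(x, weight_matrix):
    """Step 2: matrix-vector multiplication, giving one score per class."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight_matrix]


def explain(x, weight_matrix, target, n_trials=2000, seed=0):
    """Step 3: integrate the target-class score onto each pixel a mask kept."""
    rng = random.Random(seed)
    n = len(x)
    acc = [0.0] * n     # accumulated score per pixel
    kept = [0] * n      # number of trials in which each pixel was kept
    for _ in range(n_trials):
        mask = perturbation_mask(n, rng)
        masked_input = [m * v for m, v in zip(mask, x)]
        score = infer(masked_input, weight_matrix)[target]
        for i, m in enumerate(mask):
            if m:
                acc[i] += score
                kept[i] += 1
    # Average target score over the trials in which each pixel was present
    return [a / k if k else 0.0 for a, k in zip(acc, kept)]


# Assumed toy model: class 0 depends mostly on pixel 0, class 1 on pixels 1 and 2
weight_matrix = [
    [1.0, 0.0, 0.0, 0.2],
    [0.0, 1.0, 1.0, 0.0],
]
image = [1.0, 1.0, 1.0, 1.0]
saliency = explain(image, weight_matrix, target=0)
most_important = saliency.index(max(saliency))
print("saliency map:", [round(s, 2) for s in saliency])
print("most influential pixel for class 0:", most_important)
```

Pixels that raise the target score whenever they survive the mask end up with the highest averages, which is the sense in which integrating over many perturbations reveals the most influential input features.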

| To demonstrate their system, the researchers performed image recognition tasks on simple test images as well as a more complex dataset of handwritten digits. They showed that the memristive XAI system could successfully identify which pixels were most important in reaching an image classification decision. Crucially, it did so using only 4.32% of the energy required to perform the same task in software. | |
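As a quick check of what the 4.32% figure implies, the short calculation below converts it into a reduction factor and a percentage saved. Only the 4.32% value comes from the article; the software energy is normalized to 1 as an assumption, so the result is a ratio and not an absolute energy measurement.

```python
software_energy = 1.0           # normalized reference (assumption)
hardware_fraction = 0.0432      # 4.32%, as reported in the article

reduction_factor = software_energy / hardware_fraction
savings_percent = (1 - hardware_fraction) * 100

print(f"about {reduction_factor:.1f}x less energy, {savings_percent:.2f}% saved")
```

In other words, the reported figure corresponds to roughly a 23-fold reduction in energy relative to the software baseline.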

| The researchers say that this massive gain in efficiency is the key breakthrough. "The XAI algorithms are very computationally intensive," said Dr. Han Chan Song, first author of the study. "We've shown that by moving these functions into hardware, we can implement XAI in a much more scalable and sustainable way. This could enable real-time explanations for far more complex AI models than was previously feasible." | |

| Looking ahead, the researchers see several important steps to build on their proof-of-concept. They plan to scale up the memristor arrays to tackle XAI for the large neural networks used in real-world applications. They also hope to collaborate with neuroscientists to make the explanation outputs even more intuitive and understandable for human users. Ultimately, they envision their memristive XAI hardware being integrated into smart sensors and edge computing devices. | |

| "We're still in the early stages, but memristive XAI could become a key enabler for transparent and trustworthy AI," said Prof. Kim. "As AI grows more sophisticated and ubiquitous, the need to understand its inner workings will only grow more urgent. Hardware-based solutions like this could help us keep pace and realize the full potential of AI to benefit society." | |

| If Kim and his colleagues' vision pans out, memristive hardware could one day power the explainable AI systems inside our smartphones, wearables, vehicles, and smart devices. Just as the human brain achieves its incredible feats of perception and cognition through dense networks of neurons and synapses, memristor arrays may be well suited to unraveling the mysteries of their artificial counterparts. In a future of pervasive AI, such transparency will be essential to ensure the technology remains a beneficial and trusted part of our lives. | |

By Michael Berger – Michael is author of three books by the Royal Society of Chemistry: Nano-Society: Pushing the Boundaries of Technology, Nanotechnology: The Future is Tiny, and Nanoengineering: The Skills and Tools Making Technology Invisible

Copyright © Nanowerk LLC


|

|

|


|