| Jun 15, 2021 | |

A robotic hand that updates itself |


| (Nanowerk News) Manipulation is one of the most common ways robots physically interact with the world. Although a growing number of real-world applications have been deployed in recent years, robotic manipulation remains a challenging problem with many subproblems yet to be addressed. | |

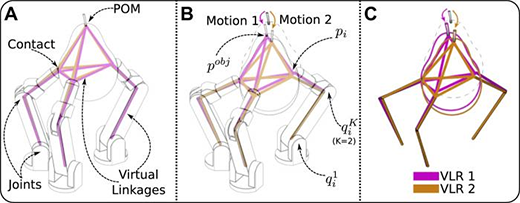

| To capture the sophisticated mechanics of in-hand manipulation, a team of researchers in the lab of Prof. Aaron Dollar, professor of mechanical engineering & materials science & computer science, designed a robotic system that relies on its ability to map out how it interacts with its environment to adapt and update its own internal model. To give the hand precise control, the researchers derived algorithms for the system to self-identify necessary parameters through exploratory hand-object interactions. | |

| To demonstrate the results, recently published in Science Robotics ("Manipulation for self-Identification, and self-Identification for better manipulation"), the research team - postdoctoral associate Kaiyu Hang, graduate students Walter G. Bircher and Andrew S. Morgan, and Dollar - had the hand perform a number of tasks, including cup-stacking, writing with a pen, and grasping various objects. | |

| Here, lead author Kaiyu Hang explains the work and its significance: | |

| Robot manipulation in real-world, unstructured environments remains one of the most important unsolved problems in robotics, due to its crucial role in many relevant domains, such as manufacturing, healthcare, and household services. | |

| Traditionally, model-based robotic manipulation systems are built with model parameters that are either known a priori or can be obtained directly from the available sensing modalities, including vision, tactile, force/torque, and proprioceptive sensors. However, a major challenge at the core of this problem is that, in a large number of task scenarios, many model parameters are not directly available to the robot, whether because of missing prior knowledge, limited sensors, or physical uncertainties. | |


| (Image: Yale University) | |

| My research has been centered around the development of model-based planning and control algorithms for robotic manipulation systems. I have always been interested in the scientific questions of how to build robust and generalizable manipulation frameworks. In particular, I have always wondered how we can push the boundaries of robotic manipulation beyond the point where we, or the incorporated sensors, have to provide all necessary model parameters to enable each and every manipulation skill. | |

| Instead, I would like to empower robots with the ability to acquire manipulation-relevant model parameters and knowledge through their own exploratory actions, even when the prior information and sensing modalities are very limited. These questions inspired me to look into the research of “Manipulation for Self-Identification, and Self-Identification for Better Manipulation.” | |

What’s the significance of this work? |


| The proposed concept of self-identification will shift the traditional paradigm of robotic manipulation from “sense, plan, act” to “act, sense, plan,” which is more naturally seen in almost every manipulation task we humans perform, because we can rarely expect to fully acquire all knowledge of a complex system before we interact with it and feel how it physically responds. | |

| The proposed framework allows adaptive estimation schemes to offer robustness in accommodating limited sensing capabilities and various uncertainties, as well as computational scalability by estimating only task-relevant model parameters. For example, in this work we showed that we can self-identify hand-object systems and then precisely manipulate them without even modeling the object’s geometry. | |

| Rather than handling a number of sensing modalities all at the same time to build and actively maintain manipulation models, the proposed framework is able to self-identify key information, without necessarily sensing it directly, via interactive perception based on limited or even minimal sensing. By leveraging exploratory actions, we enable robots to rely more on themselves, rather than on human experts or additional sensors, to provide a larger number of more generalizable and robust manipulation skills. | |
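The “act, sense, plan” ordering described above can be illustrated with a toy sketch. This is not the researchers' algorithm: it assumes a hypothetical one-dimensional system in which an object's displacement is an unknown gain times the actuator command, and all function names (`explore`, `identify_gain`, `plan_command`) are invented for illustration. The robot first acts with a few small exploratory commands, senses the noisy responses, estimates the one parameter it needs, and only then plans.

```python
import random

def explore(true_gain, commands, noise=0.01, seed=0):
    """Act, then sense: apply each command and record the noisy response.

    true_gain stands in for the physical system; the robot never reads it.
    """
    rng = random.Random(seed)
    return [(u, true_gain * u + rng.gauss(0.0, noise)) for u in commands]

def identify_gain(samples):
    """Least-squares estimate of k in y = k * u from (u, y) pairs."""
    num = sum(u * y for u, y in samples)
    den = sum(u * u for u, _ in samples)
    return num / den

def plan_command(target, gain_estimate):
    """Plan: pick the command expected to produce the target displacement."""
    return target / gain_estimate

# Hypothetical values chosen only for this illustration.
samples = explore(true_gain=0.8, commands=[0.1, 0.2, 0.3, 0.4])
k_hat = identify_gain(samples)
u = plan_command(target=0.5, gain_estimate=k_hat)
print(f"estimated gain: {k_hat:.3f}, planned command: {u:.3f}")
```

Only the single task-relevant parameter is estimated here, and nothing about the object's shape is modeled, which loosely mirrors the point about focusing on task-relevant parameters.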

Who might disagree with this? |


| Our proposed work pushes the boundaries of model-based manipulation systems and can, at the same time, benefit or be incorporated into learning-based manipulation systems. We therefore do not expect any research community to disagree with our general framework, although many aspects of our work still need to be improved. | |

What excites you most about this finding? |


| The most interesting aspect of this work is that we are shifting the paradigm of robotic manipulation from “sense, plan, act” to “act, sense, plan.” We expect this paradigm shift to enable a large number of manipulation tasks that were previously very difficult or even impossible. Traditionally, limited by sensing, physical uncertainties, or prior knowledge, robots’ manipulation skills have been restricted to more or less “quasistatic” schemes. With self-identification, we hope to empower robots to be more “dynamic” in manipulation. | |

| On the other hand, self-identification can greatly improve the capabilities of cheap robot designs, such as 3D-printed, soft, sensorless robots, allowing them to more precisely self-identify, plan, and control their manipulation actions. This will make robots accessible in many more application scenarios, and facilitate their deployment in more dangerous environments where expensive but fragile hardware is not preferred. | |

What are the next steps with this, for you or other researchers? |


| In addition to the hand-object systems investigated in this work, we will study how to enable self-identification for more general manipulation systems, ranging from hands and arms to dual-arm and mobile manipulation systems, and even aerial manipulation platforms. With safety and efficiency in mind, we plan to continue our research by looking into more efficient and robust adaptive estimation approaches. In particular, we seek to develop generic self-identification frameworks that are generalizable and transferable across different robot embodiments, while providing efficient adaptive estimation for systems where real-time performance is a concern. | |

| Meanwhile, we envision more research benefiting from self-identification as a way to overcome limited sensing and physical uncertainties. Beyond model-based approaches, we are optimistic that the concept of self-identification will facilitate general progress in robotic manipulation research, including deep learning-based systems. |

| Source: Yale University |
