| Feb 02, 2024 | |

Butterfly mating behaviors inspire next-level brain-like computing |

|

| (Nanowerk Spotlight) Artificial intelligence systems have historically struggled to integrate and interpret information from multiple senses the way animals intuitively do. Humans and other species rely on combining sight, sound, touch, taste and smell to better understand their surroundings and make decisions. However, the field of neuromorphic computing has largely focused on processing data from individual senses separately. | |

| This unisensory approach stems in part from the lack of miniaturized hardware able to co-locate different sensing modules and enable in-sensor and near-sensor processing. Recent efforts have targeted fusing visual and tactile data. However, visuochemical integration, which merges visual and chemical information to emulate the complex sensory processing seen in nature, remains relatively unexplored. Butterflies, for instance, integrate visual signals with chemical cues when making mating decisions. Smell can potentially alter visual perception, yet current AI leans heavily on visual inputs alone, missing a key aspect of biological cognition. | |

| Now, researchers at Penn State University have developed bio-inspired hardware that embraces heterogeneous integration of nanomaterials to allow the co-location of chemical and visual sensors along with computing elements. This facilitates efficient visuochemical information processing and decision-making, taking cues from the courtship behaviors of a species of tropical butterfly. | |

| The work helps overcome key barriers holding back multisensory computing. It also has significant implications in robotics and other fields reliant on situational understanding and responding to complex environments. | |

|

|

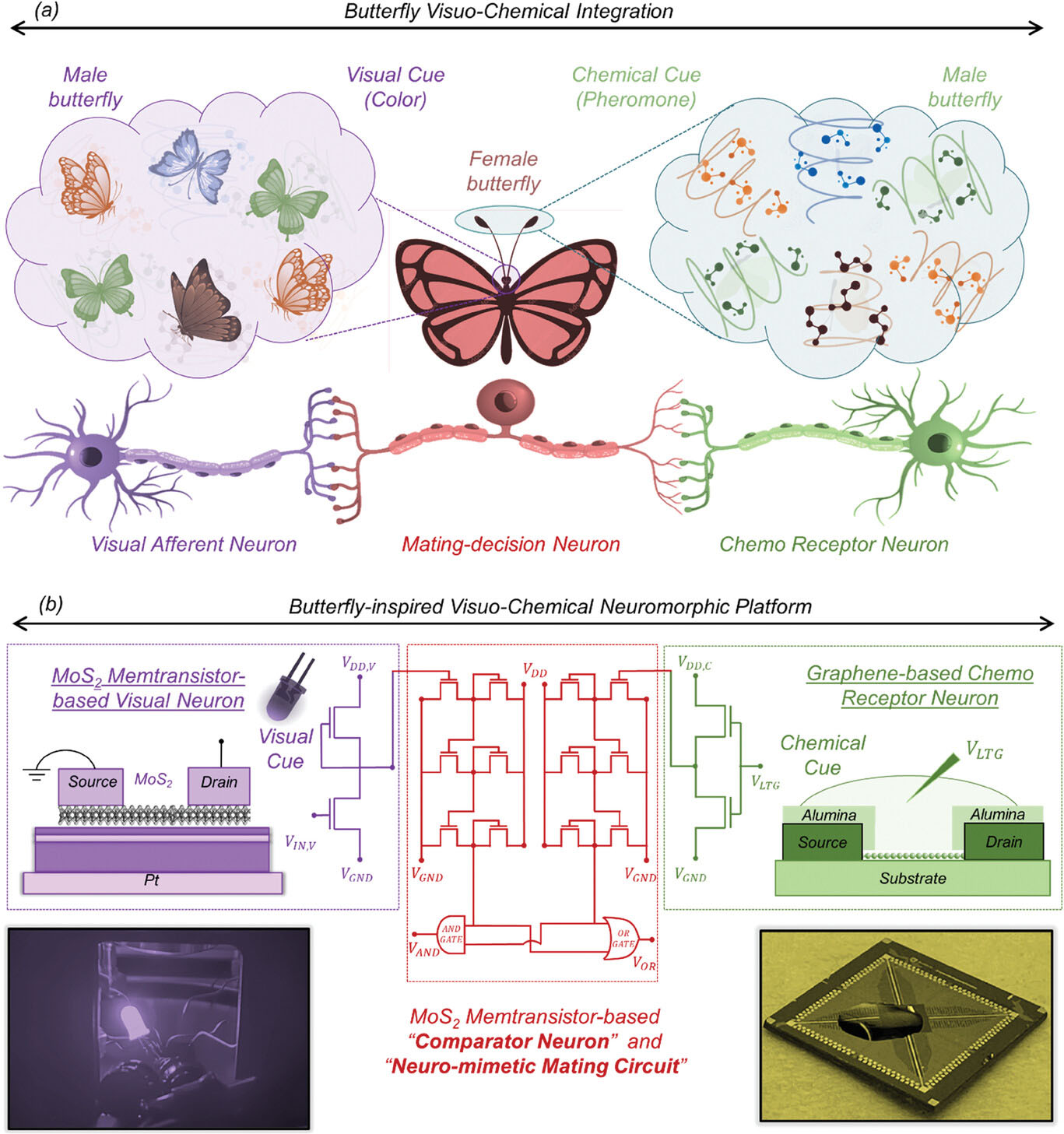

| Butterfly-inspired visuochemical integration. a) A simplified abstraction of visual and chemical stimuli from male butterflies and the visuochemical integration pathway in female butterflies. b) Butterfly-inspired neuromorphic hardware comprising a monolayer MoS2 memtransistor-based visual afferent neuron, a graphene-based chemoreceptor neuron, and MoS2 memtransistor-based neuro-mimetic mating circuits. (Reprinted with permission from Wiley-VCH Verlag) | |

| In the paper published in Advanced Materials ("A Butterfly-Inspired Multisensory Neuromorphic Platform for Integration of Visual and Chemical Cues"), the researchers describe creating their visuochemical integration platform inspired by Heliconius butterflies. During mating, female butterflies rely on integrating visual signals like wing color from males along with chemical pheromones to select partners. Specialized neurons combine these visual and chemical cues to enable informed mate choice. | |

| To emulate this capability, the team constructed hardware encompassing monolayer molybdenum disulfide (MoS2) memtransistors serving as visual capture and processing components. Meanwhile, graphene chemitransistors functioned as artificial olfactory receptors. Together, these nanomaterials provided the sensing, memory and computing elements necessary for visuochemical integration in a compact architecture. | |

| Configuring the Platform Hardware | |

| The visual afferent neuron was built by connecting two MoS2 memtransistors into an inverter circuit that exploits their photogating properties. Flashes of light created persistent shifts in the transistors’ behavior, and pulsed illumination generated analog electrical signals capturing essential details about the visual stimuli. | |

| Two graphene chemitransistors wired in series comprised the artificial chemoreceptor neuron. Dropping various chemical solutions onto the graphene surfaces served as inputs. A liquid top gate effect allowed channel conductances to be tuned by applied biases. Gradual evaporation of the solutions created smoothly changing electrical outputs capturing aspects of the chemical stimuli over time. | |

| The team also constructed comparator neurons from cascaded MoS2 inverter stages. These transform the analog receptor outputs into digital bits for easier manipulation by downstream logic circuits. Adjusting transistor thresholds enabled modifying decision boundaries and response timing. | |

| The researchers could encode stimulus intensity and wavelength for vision and distinguish between chemical concentrations by mapping sensory characteristics onto the timing of digital state changes. Such spike timing representations resemble biologically observed neural coding. | |
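The timing-based encoding described above can be illustrated with a small numerical sketch. It is a toy model, not the circuit from the paper: the saturating response `v(t) = intensity * (1 - exp(-t / tau))`, the function names `crossing_time` and `digitize`, and all parameter values are assumptions made only for illustration. The sketch shows how a fixed comparator threshold turns an analog level into a digital bit, and how a stronger stimulus flips that bit earlier.

```python
import math
from typing import List, Optional, Sequence


def crossing_time(intensity: float, threshold: float, tau: float = 1.0) -> Optional[float]:
    """Time at which an assumed saturating response first reaches the threshold.

    Toy model (an assumption, not taken from the paper):
        v(t) = intensity * (1 - exp(-t / tau))
    Returns None when the response saturates at or below the threshold.
    """
    if intensity <= threshold:
        return None
    return -tau * math.log(1.0 - threshold / intensity)


def digitize(samples: Sequence[float], threshold: float) -> List[int]:
    """Comparator-style conversion: 1 where the analog sample exceeds the threshold."""
    return [1 if v > threshold else 0 for v in samples]


if __name__ == "__main__":
    # A stronger stimulus crosses the same threshold sooner.
    for intensity in (1.5, 2.0, 4.0):
        print(intensity, crossing_time(intensity, threshold=1.0))
    # Raising the threshold delays the state change for the same stimulus.
    print(crossing_time(2.0, threshold=1.5))
```

In this picture, shifting `threshold` plays the role that adjusting transistor thresholds plays in the text: it moves both the decision boundary and the moment at which the digital state changes.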

| With the hardware modules established, the team showcased visuochemical integration by emulating how female Heliconius butterflies assess male partners using vision and smell. The mating choices depend on wing coloration vividly signaling fitness. But they are also swayed by the specific composition of pheromones emitted. | |

| The researchers demonstrated that by combining simple AND and OR logic operations carried out with MoS2 circuits, flexible decision policies taking both visual and chemical factors into account could be implemented. More nuanced strategies are possible by designing additional appropriate algorithms. | |
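As a rough software analogy for the AND/OR decision policies mentioned above, the following sketch combines two digital bits, one per sense. The function name `mate_decision`, the `policy` argument and the boolean inputs are hypothetical and chosen for illustration; the actual platform implements this logic in MoS2 circuits rather than in code.

```python
from itertools import product


def mate_decision(visual_ok: bool, chemical_ok: bool, policy: str = "and") -> bool:
    """Combine digitized visual and chemical cues under a simple logic policy.

    policy="and": accept only if both cues pass (strict policy).
    policy="or":  accept if either cue passes (permissive policy).
    """
    if policy == "and":
        return visual_ok and chemical_ok
    if policy == "or":
        return visual_ok or chemical_ok
    raise ValueError(f"unknown policy: {policy!r}")


if __name__ == "__main__":
    # Print the truth table for both policies.
    for visual_ok, chemical_ok in product((False, True), repeat=2):
        print(
            visual_ok,
            chemical_ok,
            mate_decision(visual_ok, chemical_ok, "and"),
            mate_decision(visual_ok, chemical_ok, "or"),
        )
```

The two policies differ only when the cues disagree, which is where the choice of logic determines how much weight each sense carries in the final decision.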

| Butterfly-inspired demonstrations were intended to validate system capabilities, not necessarily match actual insect behavior. Nonetheless, the platform’s configurability showcases how it could potentially replicate observed animal multisensory processing once properly trained with biological data. | |

| While mating butterflies served as inspiration, the developed technology has much wider relevance. It represents a significant step toward overcoming the reliance of artificial intelligence on single data modalities. Enabling integration of multiple senses can greatly improve situational understanding and decision-making for autonomous robots, vehicles, monitoring devices and other systems interacting with complex environments. | |

| The work also helps progress neuromorphic computing approaches seeking to emulate biological brains for next-generation ML acceleration, edge deployment and reduced power consumption. In nature, cross-modal learning underpins animals’ adaptable behavior and intelligence emerging from brains organizing sensory inputs into unified percepts. This research provides a blueprint for hardware co-locating sensors and processors to more closely replicate such capabilities. | |

| Looking forward, the nanomaterial integration strategy provides a foundation for adding further sensing modes such as thermal, tactile or acoustic alongside the existing chemical and visual ones. Because the computing elements already reside on-chip, exploiting the memristive and electrochemical properties of the materials, this hardware fusion concept could ultimately recreate much more of the sophisticated yet compact processing found in biology. | |

| The researchers have demonstrated a promising first step toward multisensory integration in neuromorphic hardware by showing their visuochemical platform can leverage different sensing nanomaterials to emulate aspects of biological decision-making. While the butterfly courtship behaviors served as convenient bio-inspiration to validate system capabilities, the developed technology has wider relevance for overcoming reliance on single data modalities in artificial intelligence systems. Co-locating sensors and processors also moves toward neuromorphic designs that more closely resemble innate cross-modal biological processing. | |

| The authors conclude that this foundational work shows the value of a cross-disciplinary approach combining insights from various fields to map sensory capabilities onto adaptable hardware. It provides a basic architecture for incorporating multiple sensing modalities along with resident computing power. The authors note that next steps involve building upon these conceptual demonstrations with more complex circuits and algorithms to fully realize the approach’s potential. However, realization of longer-term possibilities for expanding sensorial coverage and full-scale applications awaits considerable additional innovation built upon this groundwork. | |

By Michael Berger – Michael is author of three books by the Royal Society of Chemistry: Nano-Society: Pushing the Boundaries of Technology, Nanotechnology: The Future is Tiny, and Nanoengineering: The Skills and Tools Making Technology Invisible

Copyright © Nanowerk LLC

